How Does AI Email Security Work in 2026 — and Why Traditional Filters Fail?

Email remains the primary attack vector for cybercriminals in 2026. With remote work, cloud collaboration and global connectivity at record levels, enterprise inboxes are flooded with millions of messages daily – and the risks have never been higher. Threat actors now wield advanced tools like generative AI and deepfakes to craft extremely convincing phishing and social engineering emails.

Business Email Compromise (BEC) schemes are surging, often siphoning six-figure sums, and zero-day malware hides in seemingly innocent attachments. In this environment, legacy email defenses are breaking down. CISOs and enterprise IT admins face an urgent imperative: move beyond static, rules-based filters and adopt AI-driven email security solutions. This article explains how AI email security works in 2026, why traditional filters fall short, and how StrongestLayer helps modern enterprises protect themselves.

The Evolving Email Threat Landscape in 2026

By 2026, the email threat landscape has grown more complex and dangerous. Phishing attacks are no longer obvious misspelled scams – they are highly sophisticated and targeted. Attackers use Large Language Models (LLMs) like GPT-4 to generate contextually relevant and personalized phishing emails that mimic a CEO’s tone or a vendor’s style. Deepfake audio and video inside links or attachments impersonate executives requesting urgent wire transfers. Even “quishing” (QR-code phishing) has emerged: malicious links are encoded as QR codes inside images or PDFs, where text-based link scanners never see them.

Modern BEC attackers study their targets in advance, learning writing styles and schedules. A recent industry analysis found that nearly half of all phishing and BEC attacks now slip past traditional secure email gateways. Compromised email accounts inside an organization can launch new attacks that appear fully legitimate. Meanwhile, malware authors use polymorphic tactics and zero-day exploits in document macros or encrypted attachments that evade old antivirus signatures.

In this context, AI email security has become essential for enterprise defense. Our analysts project the global enterprise email security market will hit nearly $24 billion by 2030, driven largely by AI and machine learning adoption. Unlike static filters, AI systems analyze each email in context and keep learning from new data, which lets them spot subtle threats. For CISOs and IT teams, the message is clear: email protection in 2026 must be intelligent and adaptive, or attackers will prevail.

Why Traditional Email Filters Fall Short

Before AI, most companies relied on Secure Email Gateways (SEGs) and antivirus scanners. Traditional email filters use fixed rules and signatures: they block known-bad IPs and domains, scan for specific malicious keywords or attachments, and quarantine obvious spam. While effective against mass spam and well-known malware, this approach has serious blind spots in today’s landscape:

- Signature and Pattern Limitations: Rule-based filters detect only what they know. A brand-new (zero-day) malware strain or a slightly modified phishing template can slip through until analysts create a signature. In 2026’s fast-moving threat world, waiting for signature updates is too slow.

- No Context Awareness: Traditional filters treat each email in isolation. They can’t understand why a request is suspicious. A BEC email that contains nothing but normal-looking text and no flagged links often looks perfectly clean to a static gateway, yet it may instruct a finance clerk to wire money to an attacker. Legacy tools miss these social-engineering cues.

- High False Positives: Fixed rules can also produce many false alarms. For example, a legitimate email with an unfamiliar attachment type or foreign language text might get flagged or blocked, wasting helpdesk time. Administrators often have to create complex whitelist rules, which only further reduce security.

- Static Whitelists/Blacklists: Classic filters rely on manually updated blacklists and whitelists. Attackers constantly register new domains and tweak URLs to evade blacklists. Conversely, whitelisting one trusted sender doesn’t help if that sender’s account is later compromised.

- Limited Sender Verification: SEGs implement basic checks like SPF/DKIM/DMARC, but these only confirm that a message was authorized by the domain it claims to come from. They don’t analyze sender behavior or check whether that domain is an imitation. An attacker who registers an imposter domain like “ceo-compañy.com” instead of “ceo-company.com” can publish valid SPF and DKIM records for it, so the email passes authentication, and a traditional filter often lets it through.
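To make the lookalike-domain problem concrete, here is a minimal Python sketch of the kind of check a static gateway skips. It assumes you maintain a set of trusted domains. The `skeleton` folding (Punycode decoding, accent stripping, and a few digit and letter swaps) and the edit-distance cutoff of 2 are illustrative choices, not a complete homoglyph table or any vendor's actual logic.

```python
import unicodedata


def skeleton(domain: str) -> str:
    """Fold a domain toward plain ASCII so visual look-alikes collide."""
    try:
        # Decode Punycode labels ("xn--...") back to Unicode when present.
        domain = domain.encode("ascii").decode("idna")
    except UnicodeError:
        pass  # already non-ASCII, or not valid IDNA: use as-is
    # NFKD splits accented letters such as "ñ" into "n" plus a combining mark.
    decomposed = unicodedata.normalize("NFKD", domain.lower())
    folded = "".join(c for c in decomposed if not unicodedata.combining(c))
    # A few common visual substitutions (illustrative, far from complete).
    for fake, real in (("0", "o"), ("1", "l"), ("rn", "m")):
        folded = folded.replace(fake, real)
    return folded


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed row by row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]


def is_lookalike(sender_domain, trusted_domains, max_distance=2):
    """True if sender_domain imitates, but is not, one of the trusted domains."""
    trusted = {t.lower() for t in trusted_domains}
    sender = sender_domain.lower()
    if sender in trusted:
        return False
    folded = skeleton(sender)
    return any(
        folded == skeleton(t) or edit_distance(folded, skeleton(t)) <= max_distance
        for t in trusted
    )
```

With these defaults, `is_lookalike("ceo-compañy.com", {"ceo-company.com"})` returns `True` because the accent folds away, while the genuine domain returns `False`. A small edit distance also catches typosquats such as a dropped or swapped letter, at the cost of some false positives on short domains.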

Key Point: Traditional filters look for static red flags, and sophisticated attacks exploit this. In 2026, when phishing emails can be tailor-made in real time, rules-based tools miss up to 50% of targeted attacks. Enterprises relying solely on them are effectively leaving their inboxes unguarded.

How AI Email Security Works: A Technical Breakdown

AI email security systems combine multiple layers of machine learning (ML) and artificial intelligence to analyze every dimension of an email. Instead of hardcoded rules, these systems learn from vast data on both malicious and benign emails, continuously refining their defenses. Here’s how these advanced solutions operate under the hood:

1. Data Ingestion and Feature Extraction

When an email arrives at an organization’s gateway, every component of the email is captured as data for AI analysis. This includes:

- Metadata and Headers: Sender IP, sending history, reply-to addresses, timestamp, email authentication results (SPF/DKIM/DMARC status), and geolocation patterns.

- Content: Subject line, body text, HTML code, and any embedded images or files. Natural Language Processing (NLP) breaks down the text into features like word choices, phrases, and sentiment.

- Attachments and URLs: Files (PDF, Office docs, zip, etc.) and links in the email are extracted. The system computes features such as file type, size, hashes, URL reputation, domain age, and whether the link uses suspicious redirection or obfuscation. For images and PDFs, optical character recognition (OCR) extracts text rendered as pixels, image decoders locate embedded QR codes so the links they encode can be analyzed like any other URL, and parsers look for embedded scripts.

All this raw data is preprocessed and transformed into numerical features that ML models can work with. For example, an AI might represent the tone of an email as a score, count unusual Unicode characters, or encode a link’s domain length.
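As a rough illustration of this step, the sketch below turns a raw message into a handful of numeric features using only Python's standard `email` package. The feature names, the simple substring checks on the `Authentication-Results` header, and the URL regex are assumptions for the example. Production pipelines parse these headers properly and extract far more signals.

```python
import re
from email import message_from_string, policy
from email.utils import parseaddr

# Rough pattern for the host part of http(s) links in the body (illustrative).
URL_HOST_RE = re.compile(r"https?://([^/\s\"'<>]+)", re.IGNORECASE)


def extract_features(raw: str) -> dict:
    """Turn a raw RFC 5322 message into a small dict of numeric features."""
    msg = message_from_string(raw, policy=policy.default)
    from_addr = parseaddr(str(msg.get("From", "")))[1]
    reply_addr = parseaddr(str(msg.get("Reply-To", "")))[1]
    from_domain = from_addr.rpartition("@")[2].lower()
    reply_domain = reply_addr.rpartition("@")[2].lower()
    # Authentication results as stamped by the receiving mail server.
    auth = str(msg.get("Authentication-Results", "")).lower()
    body_part = msg.get_body(preferencelist=("plain", "html"))
    body = body_part.get_content() if body_part is not None else ""
    hosts = [h.lower() for h in URL_HOST_RE.findall(body)]
    return {
        "reply_to_mismatch": int(bool(reply_domain) and reply_domain != from_domain),
        "spf_pass": int("spf=pass" in auth),
        "dkim_pass": int("dkim=pass" in auth),
        "dmarc_pass": int("dmarc=pass" in auth),
        "num_urls": len(hosts),
        "max_url_host_len": max((len(h) for h in hosts), default=0),
        "non_ascii_chars": sum(ord(c) > 127 for c in body),
        "num_attachments": sum(1 for _ in msg.iter_attachments()),
    }
```

A downstream model would then consume these dictionaries as fixed-length numeric vectors.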

2. Content and Language Analysis (NLP)

A core component is natural language understanding. Modern AI filters use advanced NLP techniques (often transformer-based models similar to those behind GPT) to read the email as a human would. They analyze:

- Intent and Keywords: The system identifies suspicious intents (e.g. “Please reset password”, “Urgent wire transfer”, or “verify your account”). It catches nuances like creating a false sense of urgency or impersonation cues (“from HR”, “CEO says”).

- Writing Style and Anomalies: AI can learn an organization’s typical email tone and flag deviations. For example, if the real CEO never uses exclamation marks but a phishing email from a CEO-like address is full of them, the model notes the oddity.

- Language Mismatch: An email supposedly from a global partner written in a language the partner never used, or with odd grammar, can be caught by language models that know what's normal.

- Embedded Content: If the email contains hidden or misleading HTML (like mismatched link text and URL), AI parsing will detect that. It can also extract text hidden in images or detect tiny QR codes embedded in attachments and analyze their destination.

By using deep-learning NLP, AI filters interpret the meaning of the message, not just keywords. For instance, they can recognize that “Can you take care of a quick payment before end of day? I’m stuck in meetings” combines a payment request, time pressure, and an excuse for being unreachable, even though it contains none of the classic trigger words. This semantic understanding is what enables AI to detect clever social engineering that traditional filters miss.
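Production systems use transformer models for this, and those are too large to show here. As a much simpler stand-in, the sketch below trains a multinomial Naive Bayes classifier (a bag-of-words model, not a transformer) on a few hypothetical labeled emails. It shows how a learned model assigns intent labels rather than matching a fixed keyword list. The training sentences and label names are invented for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesIntent:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def predict(self, text):
        n_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for c in self.classes:
            # log P(class) + sum of log P(word | class) over known words
            score = math.log(self.doc_counts[c] / n_docs)
            denom = self.totals[c] + len(self.vocab)
            for word in tokenize(text):
                if word in self.vocab:  # skip words never seen in training
                    score += math.log((self.word_counts[c][word] + 1) / denom)
            if score > best_score:
                best_label, best_score = c, score
        return best_label


# Hypothetical training examples; a real model needs far more data.
TRAIN = [
    ("urgent wire transfer needed today keep this confidential", "payment_request"),
    ("please process this payment before end of day i am in meetings", "payment_request"),
    ("verify your account password now or access will be suspended", "credential_request"),
    ("your mailbox is full log in to confirm your password", "credential_request"),
    ("attached are the slides from thursday's team meeting", "benign"),
    ("lunch menu for the offsite is attached see you there", "benign"),
]

model = NaiveBayesIntent().fit([t for t, _ in TRAIN], [label for _, label in TRAIN])
```

On this toy data, “can you handle a quick payment before end of day” is labeled `payment_request` even though that exact sentence never appears in training. A real deployment would need thousands of labeled examples and a model that captures word order and context.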

3. Behavioral and Contextual Analysis

Beyond content, AI solutions profile the behavior of senders and recipients over time:

- Sender Reputation Modeling: Instead of a static reputation list, AI builds dynamic trust scores. If a sender’s domain has a history of normal communication patterns (e.g. a partner company that emails monthly reports), the trust score is high. A sudden email from that partner with an unusual request might get further scrutiny. Unknown or brand-new domains start with a low trust score, which rises only if they behave legitimately over time.

- Internal Communication Graphs: AI maps an organization’s email graph, recording who regularly talks to whom. A payment request between people who routinely exchange such messages may fit normal patterns. But if someone in accounting who has never corresponded with the CEO suddenly gets a wire-transfer email from the CEO, the request breaks historical patterns and an anomaly is detected.

- User Behavior Profiles: Each user or role has baseline behavior. For example, IT admins might regularly receive vendor invoices, whereas salespeople do not. If a salesperson suddenly gets a benign-looking invoice from a known vendor, it might raise a flag because of the context mismatch.

- Time and Volume Patterns: AI notes if emails come at odd hours or from unusual geographic locations for a given user. A series of emails arriving at 2 AM PST for an employee who is normally offline at that hour can be suspect. Likewise, if one sender suddenly sends a burst of personalized-looking messages, it may indicate a compromised account or an attack in progress.
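The dynamic trust score described above can be sketched with a simple Beta-reputation model, one common way to turn counts of good and bad outcomes into a score between 0 and 1. The pessimistic prior (1 good, 4 bad) is an assumption chosen so that unknown senders start at 0.2 and earn trust only through a legitimate history.

```python
from dataclasses import dataclass


@dataclass
class SenderTrust:
    good: int = 0             # messages from this sender later judged legitimate
    bad: int = 0              # messages from this sender later judged malicious
    prior_good: float = 1.0   # hypothetical pessimistic prior
    prior_bad: float = 4.0

    def score(self) -> float:
        """Posterior mean of Beta(prior_good + good, prior_bad + bad)."""
        a = self.prior_good + self.good
        b = self.prior_bad + self.bad
        return a / (a + b)

    def record(self, legitimate: bool) -> None:
        """Update the counts with one verdict about a message from this sender."""
        if legitimate:
            self.good += 1
        else:
            self.bad += 1
```

A brand-new domain scores 0.2. After 20 legitimate messages it reaches 21/25 = 0.84, and a single malicious message pulls it back down.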

By correlating content signals with these behavioral patterns, AI filters spot subtle threats. For example, a text-only phishing email might look harmless content-wise, but if it comes from a new domain to a user who has never corresponded with that domain before, the combined risk score rises.
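The next sketch shows how per-recipient history can turn into behavioral signals. It checks whether a sender's domain has ever written to this recipient before, and whether the arrival hour is rare for that recipient. The class name, the 2% rarity cutoff, the 20-message minimum history, and the score bumps in `behavioral_risk` are hypothetical values chosen for illustration.

```python
from collections import Counter, defaultdict


class BehaviorProfile:
    """Per-recipient history: which sender domains write, and at what hour."""

    def __init__(self, min_history=20, rare_share=0.02):
        self.contacts = defaultdict(Counter)  # recipient -> sender-domain counts
        self.hours = defaultdict(Counter)     # recipient -> arrival-hour counts
        self.min_history = min_history
        self.rare_share = rare_share

    def observe(self, sender_domain, recipient, hour):
        self.contacts[recipient][sender_domain] += 1
        self.hours[recipient][hour] += 1

    def signals(self, sender_domain, recipient, hour):
        seen = self.hours[recipient]
        total = sum(seen.values())
        # Past mail that arrived within one hour of this message's hour.
        nearby = sum(seen[(hour + d) % 24] for d in (-1, 0, 1))
        return {
            "first_contact": self.contacts[recipient][sender_domain] == 0,
            "unusual_hour": total >= self.min_history and nearby / total < self.rare_share,
        }


def behavioral_risk(signals, content_risk):
    """Hypothetical combination rule: each behavioral flag raises the content score."""
    bump = 0.25 * signals["first_contact"] + 0.15 * signals["unusual_hour"]
    return min(1.0, content_risk + bump)
```

Consider a recipient who normally receives mail between 9 AM and 5 PM. A 2 AM message from a never-seen domain trips both flags, so a modest content score of 0.3 rises to about 0.7.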

4. Attachment and Malware Detection

Attachments and links are a major threat vector, and StrongestLayer plays a crucial role in inspecting them:

- Static Feature Analysis: The AI inspects the attachment file type, size, and structure. If it’s an executable or script, or an Office file with macros, it’s immediately suspicious. Unusual file properties or names (like “invoice.pdf.exe”) are flagged.

- URL and Link Reputation: URLs are checked against threat intelligence databases and via real-time lookup. AI supplements this by evaluating the URL structure (long random-looking URLs, mismatched display text, use of URL shorteners) which often correlate with phishing.

- Sandbox Detonation: Many AI email solutions incorporate sandboxing: they open attachments or follow links in an isolated, controlled environment to see if malware deploys or if the link loads a malicious page. StrongestLayer’s AI can do this rapidly at “time-of-click” as the user attempts to open the link, providing just-in-time protection.

- Steganography and Image Analysis: Attackers sometimes hide malicious code or links inside images. AI-based optical analysis can extract text from images (detecting malicious QR codes or hidden URLs) and even scan images for embedded code or unusual pixels.
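Here is a minimal sketch of several URL checks above, using Python's standard `html.parser` and `urllib.parse`. It flags links whose visible text looks like one address but whose `href` points to another, links that use a shortener, and links that go straight to a raw IP address. The shortener list and the URL-shaped-text regex are illustrative assumptions. Real systems add reputation lookups and redirect following.

```python
import ipaddress
import re
from html.parser import HTMLParser
from urllib.parse import urlparse

SHORTENERS = {"bit.ly", "tinyurl.com", "t.co"}  # illustrative, not exhaustive
LOOKS_LIKE_URL = re.compile(r"(https?://)?[\w-]+(\.[\w-]+)+(/\S*)?", re.IGNORECASE)


class LinkExtractor(HTMLParser):
    """Collect (href, visible text) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def host_of(url):
    if "://" not in url:
        url = "http://" + url
    return (urlparse(url).hostname or "").lower()


def link_flags(html):
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    flagged = []
    for href, text in parser.links:
        host = host_of(href)
        reasons = []
        # Visible text that looks like an address but points somewhere else.
        if LOOKS_LIKE_URL.fullmatch(text) and host_of(text) != host:
            reasons.append("display_text_mismatch")
        if host in SHORTENERS:
            reasons.append("url_shortener")
        try:
            ipaddress.ip_address(host)
            reasons.append("raw_ip_host")
        except ValueError:
            pass
        if reasons:
            flagged.append((href, reasons))
    return flagged
```

Comparing exact hostnames is strict: `bank.com` versus `www.bank.com` would also count as a mismatch. A real implementation would compare registered domains instead.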

Because attachments and links can carry zero-day exploits, AI systems never assume "safe until proven malicious"; they analyze risk based on learned patterns. For instance, if a PDF attachment contains an obfuscated script, even without a known signature, AI’s heuristics or machine-learned patterns will likely catch the anomaly.
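The static checks described in this section can be sketched in a few lines. The example below flags executable or script extensions, double extensions such as “invoice.pdf.exe”, and macro-enabled Office types. It also flags Office Open XML files (which are ZIP archives) that contain a `vbaProject.bin` part, where VBA macros are stored. The extension lists are illustrative assumptions. Legacy binary formats such as `.doc` need a dedicated parser (for example the open-source `oletools` package) rather than this ZIP check.

```python
import io
import zipfile

RISKY_EXTENSIONS = {".exe", ".scr", ".js", ".vbs", ".bat", ".cmd", ".ps1", ".hta"}
DECOY_EXTENSIONS = {".pdf", ".doc", ".docx", ".xls", ".xlsx", ".jpg", ".png", ".txt"}
MACRO_EXTENSIONS = {".docm", ".xlsm", ".pptm"}


def attachment_flags(filename: str, data: bytes) -> list:
    """Return static red flags for one attachment (illustrative rules only)."""
    parts = filename.lower().split(".")
    ext = "." + parts[-1] if len(parts) > 1 else ""
    flags = []
    if ext in RISKY_EXTENSIONS:
        flags.append("executable_or_script")
        # e.g. "invoice.pdf.exe": a harmless-looking extension hides the real one
        if len(parts) > 2 and "." + parts[-2] in DECOY_EXTENSIONS:
            flags.append("double_extension")
    if ext in MACRO_EXTENSIONS:
        flags.append("macro_enabled_type")
    # Office Open XML files are ZIP archives; VBA macros live in vbaProject.bin.
    if zipfile.is_zipfile(io.BytesIO(data)):
        with zipfile.ZipFile(io.BytesIO(data)) as archive:
            if any(name.endswith("vbaProject.bin") for name in archive.namelist()):
                flags.append("contains_vba_project")
    return flags
```

Any non-empty result would feed into the threat score or trigger sandbox detonation rather than block the file outright.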

5. Scoring, Thresholds, and Decision

After gathering content, behavior, and attachment signals, the AI system aggregates them into a threat score for each email. This score reflects the likelihood of maliciousness based on how far the email deviates from normal patterns. If the score crosses a certain threshold, automated actions occur, such as:

- Quarantining the email for admin review.

- Flagging it with a warning in the user’s inbox.

- Allowing delivery but tagging it (e.g. “[External Email]”) to increase caution.

- Blocking only specific risky elements (like disarming a macro in an attachment).

Importantly, AI filters are not binary; they often provide risk levels or categories (phishing, spam, malware) and explain why an email was flagged. Many solutions highlight suspicious phrases or show which factors pushed the score over the threshold, so security teams understand and can fine-tune policies.
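Here is a minimal sketch of scoring, thresholds, and explanations, assuming a logistic model over binary signals. The weights, bias, feature names, and cutoffs below are hypothetical. In practice they would be learned from labeled mail and tuned per organization. Sorting the positive contributions gives the kind of “why was this flagged” list described above.

```python
import math

# Hypothetical weights; a real system learns these from labeled mail.
WEIGHTS = {
    "lookalike_domain": 2.5,
    "payment_intent": 1.5,
    "reply_to_mismatch": 1.2,
    "dmarc_fail": 1.0,
    "first_contact": 0.8,
}
BIAS = -4.0
# Ordered from strictest to mildest: (minimum probability, action).
THRESHOLDS = [(0.9, "quarantine"), (0.6, "warn_banner"), (0.3, "tag_external")]


def threat_score(features):
    """Return (probability of malice, contributing factors, largest first)."""
    contributions = {k: WEIGHTS[k] * v for k, v in features.items() if k in WEIGHTS}
    z = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-z))
    reasons = sorted((k for k, c in contributions.items() if c > 0),
                     key=lambda k: contributions[k], reverse=True)
    return probability, reasons


def decide(probability):
    """Map a probability to the first action whose cutoff it meets."""
    for cutoff, action in THRESHOLDS:
        if probability >= cutoff:
            return action
    return "deliver"
```

With every signal present, the score is about 0.95, which maps to quarantine with `lookalike_domain` listed as the top reason. With no signals present, it is under 0.02 and the message is delivered.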

6. Continuous Learning and Feedback

A hallmark of StrongestLayer’s AI email security is adaptive learning. Models are periodically retrained on new data, incorporating recent attacks and any manual reviews. For example:

- If a security team marks a malicious email as “phishing” or a false positive as “benign,” that feedback updates the model’s understanding of what’s dangerous.

- AI engines often share anonymized threat intelligence across an ecosystem of clients. When one organization’s AI detects a new BEC trick, others benefit from that knowledge.

- The system may also incorporate real-time threat feeds from industry sources to stay current on emerging malware hashes and phishing URLs.
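The feedback loop can be illustrated with online logistic regression, where each analyst verdict nudges the weights with one stochastic gradient step. This is a sketch under simple assumptions (hand-named binary features, a fixed learning rate). Real platforms typically batch feedback and retrain periodically, as described above, rather than updating on every click.

```python
import math


class OnlineLogistic:
    """Logistic regression updated one labeled example at a time (SGD)."""

    def __init__(self, feature_names, lr=0.1):
        self.w = {name: 0.0 for name in feature_names}
        self.b = 0.0
        self.lr = lr

    def predict(self, x):
        """Probability that the email described by feature dict x is malicious."""
        z = self.b + sum(self.w[k] * x.get(k, 0.0) for k in self.w)
        return 1.0 / (1.0 + math.exp(-z))

    def feedback(self, x, is_malicious):
        """Apply one analyst verdict: True = phishing, False = false positive."""
        error = self.predict(x) - float(is_malicious)  # d(log-loss)/dz
        for k in self.w:
            self.w[k] -= self.lr * error * x.get(k, 0.0)
        self.b -= self.lr * error
```

Repeatedly marking lookalike-domain emails as phishing and a newsletter sender as a false positive drives the first prediction toward 1 and the second toward 0.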

Over time, the AI becomes more accurate and attuned to an organization’s specific context. It learns to distinguish legitimate shifts in email topics and habits from genuine threats, reducing alert fatigue for the security team.

StrongestLayer’s AI in 2026 works by analyzing every angle of every message – content, context, behavior, and attachments – using machine learning models and analytics far beyond static pattern-matching. It brings together language understanding, anomaly detection, and real-time threat intelligence to proactively stop phishing, BEC, and malware that legacy filters would miss.

How AI Filters Detect Phishing, BEC, and Malware

To illustrate the power of AI email security, here’s a closer look at how these systems tackle the top email threats:

- Phishing: Traditional filters look for known phishing links and generic keywords. AI goes deeper. It examines the email’s language for social-engineering cues (unusual greetings, a sense of urgency, spoofed brand names in text), checks link contexts (a login link pointing to a domain subtly different from the real one), and uses ensemble models trained on millions of phishing examples. For example, an AI may recognize that “Dear User, Urgent: Account Update Required” matches a phishing template and correlate that with the email’s origin and attachment history. It might cross-reference the email’s language with known writing styles of that sender – if mismatched, it flags it as likely fraud.

- Business Email Compromise (BEC): These attacks often have no malicious code. An attacker just impersonates a boss asking for money or credentials. AI excels here where rules fail. By profiling normal executive communications, AI identifies anomalies in tone or request. If a sudden email tells Accounts Payable “Please transfer $50,000,” the filter will notice this deviates from prior messaging patterns. It may also check whether the requested account details align with prior transactions or if the email header’s authentication is suspicious. In many cases, even if the attacker’s domain looks convincing, AI will detect subtle discrepancies.

- Malware: Traditional AV only catches known malware signatures. AI email security adds layers: scanning attachments with ML models that have been trained on malicious payload patterns (even unrecognized ones), using sandbox analysis to trigger latent malware behavior, and analyzing code structure with static analysis models. If a Word doc contains hidden macros, AI algorithms trained on tens of thousands of malicious macros spot the hallmarks of a harmful script. The same goes for executables or links: if the behavior in a sandbox matches known ransomware or spyware, the AI tags and blocks it.

- Zero-day and Polymorphic Threats: These are attacks with no prior signature. AI uses anomaly detection: it looks at the “why” behind emails. For instance, if a legitimate-looking attachment is sent from an external party but has unusual code macros and a novel structure, AI treats it with high suspicion even if no signature exists. Similarly, if an inbound email suddenly carries a never-before-seen payload but seems to mimic a safe pattern, the model’s learned understanding of safe vs. unsafe behavior kicks in. Over time, these AI systems literally learn from new zero-day incidents, reducing the window of exposure.
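One concrete version of the BEC check mentioned above, “whether the requested account details align with prior transactions,” is sketched below. It pulls IBAN-shaped strings out of the email text and validates them with the standard IBAN mod-97 checksum. It then returns any account that has never been paid to that vendor. The `known_accounts` mapping stands in for a hypothetical export from the payments system, and the regex is deliberately simple.

```python
import re

# Two letters, two check digits, then 11-30 alphanumerics, optionally space-grouped.
IBAN_RE = re.compile(r"\b[A-Z]{2}\d{2}(?: ?[A-Z0-9]){11,30}\b")


def iban_valid(iban: str) -> bool:
    """IBAN mod-97 check: move 4 chars to the end, map letters to 10-35, expect 1."""
    rearranged = iban[4:] + iban[:4]
    digits = "".join(str(int(ch, 36)) for ch in rearranged)
    return int(digits) % 97 == 1


def new_payment_accounts(email_text, vendor, known_accounts):
    """Return checksum-valid IBANs in the email never used for this vendor before."""
    found = {m.replace(" ", "") for m in IBAN_RE.findall(email_text)}
    valid = {iban for iban in found if iban_valid(iban)}
    return sorted(valid - known_accounts.get(vendor, set()))
```

Suppose an email asks to switch Acme's payments to a new, checksum-valid IBAN. The function returns that IBAN for review, while a request that repeats the account already on file returns nothing.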

By combining all these techniques in one pipeline, AI email security achieves significantly higher detection rates than legacy filters. Industry tests often show that AI-enhanced solutions catch phishing and malware attacks that bypass ordinary SEGs, especially in targeted scenarios.

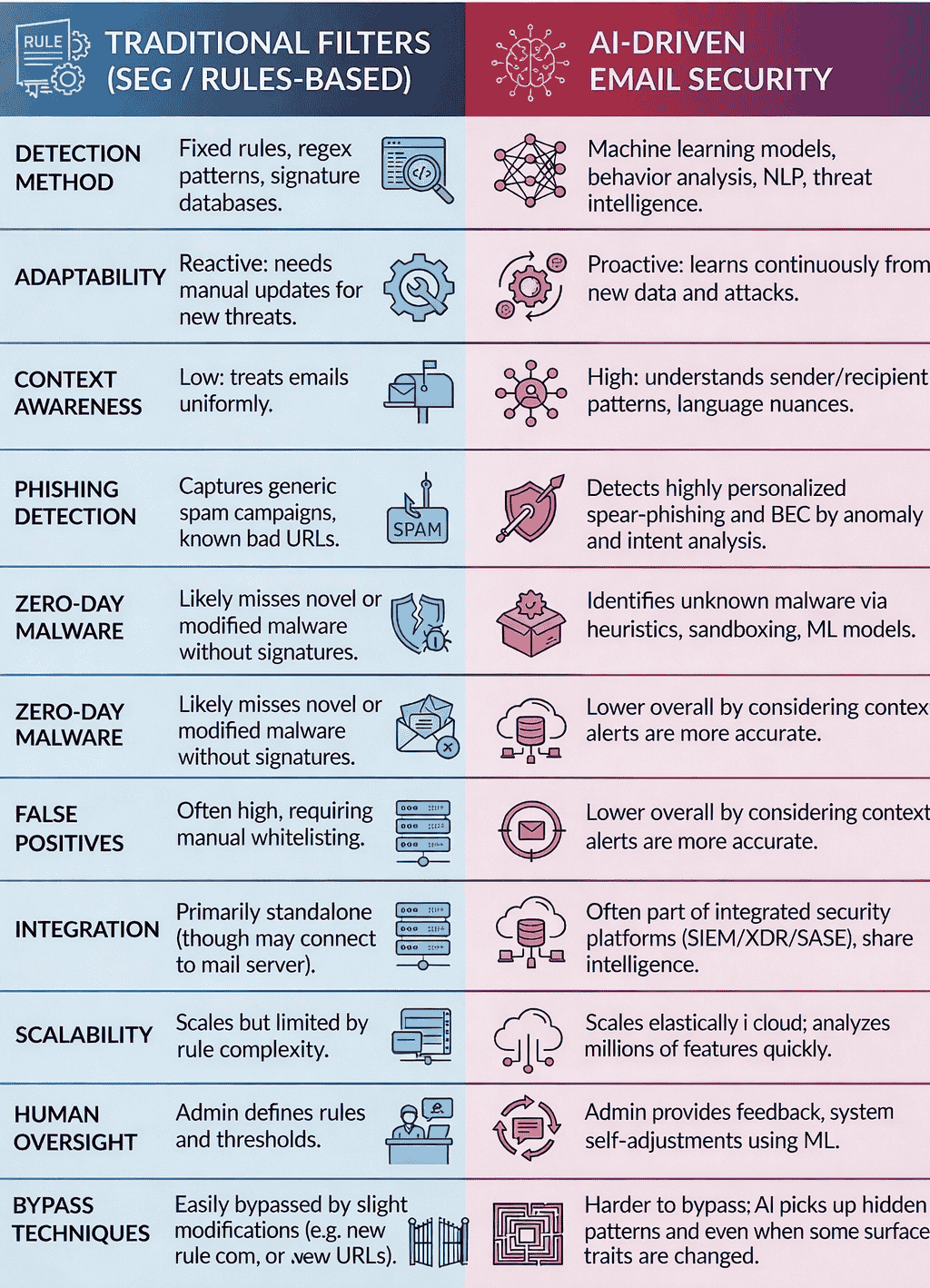

AI vs Traditional: A Side-by-Side Comparison

In essence, traditional filters excel at high-volume, known threats (spam, common viruses) but crumble under sophisticated, novel attacks. AI email security extends that baseline with intelligence and agility, catching what older systems miss.

Real-World Use Cases and Examples

Enterprises today face threats that would have been unthinkable a decade ago. Here are some illustrative scenarios where AI-based email security steps in where traditional filters would fail:

- Generative AI Phishing: A marketing intern receives an email from the CEO asking them to send the company’s bank details for an upcoming deal. The email is perfectly worded – no typos, no broken links – because an attacker used an advanced LLM to mimic the CEO’s email style. A legacy spam filter sees nothing amiss and lets it through. An AI system, however, compares the writing style against the CEO’s previous emails and notices a slight mismatch in phrasing. It also checks corporate calendars and sees the CEO is on vacation. Combining these context clues, the AI flags the email as suspicious, preventing the fraud.

- Deepfake Invoice Scam: The finance department gets an invoice PDF attachment that looks legitimate. The invoice has the correct company logo and references current projects. Traditional signature checks on the file return clean. But when the AI email filter extracts the text, it detects that certain vendor phone numbers are new and the payout account has never been used before. A quick database cross-check reveals no prior payments to that account. The AI marks the invoice email as high-risk and quarantines it for review.

- QR Code (Quishing) Attack: Sales staff get an email with a friendly message about a new client meeting, including an image of a QR code for a virtual conference link. Users trust the email content and are curious about the meeting link. A traditional filter might ignore it (no malicious URL visible). An AI system, however, analyzes the image, decodes the QR code, and follows the link in a sandbox. The link leads to a credential-stealing site that wasn’t on any blacklist. The AI instantly blocks the email and notifies security.

- Internal Account Compromise: An employee’s workstation is hacked, and their account starts sending data-exfiltration emails with spreadsheets to an external address disguised as a partner. Because the emails have no obvious malware and come from a “trusted” insider, a static filter may not block them. The AI, though, knows the user’s normal behavior: they rarely send external email in the evening. Tonight the pattern breaks, with large zip attachments going out to an unfamiliar address. The AI raises an alert for anomalous outbound behavior, giving the security team an early warning of the breach.

- Polymorphic Ransomware: A user downloads an image file from a sketchy source and unknowingly emails it to a colleague. Embedded inside the image is a malicious payload. Traditional filters would typically not inspect image contents for code. An AI filter, however, has been trained on steganography patterns and flags the image as unusual. It kicks off a deeper inspection (sandboxing) which triggers on the hidden ransomware. The email is blocked and the company avoids an outbreak.
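For the internal-compromise scenario, one simple way to express “the pattern breaks” is a robust z-score. It compares today's outbound attachment volume against the user's own history, using the median and median absolute deviation (MAD) so that a few past outliers don't distort the baseline. The 3.5 cutoff follows a common convention for modified z-scores. The per-evening megabyte history and the `external_recipient_is_new` flag are hypothetical inputs.

```python
import math
from statistics import median


def robust_z(value, history):
    """Modified z-score: distance from the median in units of scaled MAD."""
    med = median(history)
    mad = median(abs(x - med) for x in history)
    if mad == 0:
        return 0.0 if value == med else math.copysign(math.inf, value - med)
    # 1.4826 * MAD approximates the standard deviation for normal data.
    return (value - med) / (1.4826 * mad)


def outbound_alert(history_mb, today_mb, external_recipient_is_new, cutoff=3.5):
    """Alert on an extreme high outbound volume that goes to a new external address."""
    return external_recipient_is_new and robust_z(today_mb, history_mb) > cutoff
```

With a typical evening of about 3 MB, a 250 MB burst to a first-time external address raises an alert, while an ordinary 3.2 MB evening does not.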

Each of these cases highlights AI’s contextual and adaptive strengths. In practice, enterprises integrating AI email security have stopped many novel threats. For example, companies report catching “CEO impersonation” BEC attempts because the AI detected semantic inconsistencies, and multiple organizations credit AI email filters with catching attacks that made it past Microsoft/Google’s native filters.

AI Interpretability, Intent Detection, and Adaptive Learning

AI email security isn’t just about black-box detection. Enterprise teams also need transparency and continuous improvement:

- Explainability (Interpretability): Security analysts need to trust AI decisions. Cutting-edge solutions provide explainable AI features. When an email is flagged, the system highlights the key reasons: it might show which specific phrase was suspicious or how the email deviated from past patterns. For instance, it can annotate “suspicious greeting” or “anomalous sender domain” on the email view. This way, administrators see why an email was blocked and can fine-tune rules if needed. Explainability is also important for compliance audits, proving that the system isn’t arbitrarily blocking legitimate emails.

- Intent Detection: Beyond spotting malicious text, AI models are being trained to understand the intent behind a message. Is the email asking you to log in somewhere? Transfer money? Reset credentials? This semantic insight allows the security system to apply focused checks. For example, if an email intent is classified as “payment request,” the AI might automatically verify payment instructions against known templates or require extra approval steps. By categorizing intent, the AI can enforce business logic rules (e.g. “no wire transfer requests are allowed outside finance, period”) more intelligently.

- Adaptive Learning: Attackers are constantly evolving, so AI solutions include continuous learning loops. When new phishing tactics are observed (say, a novel PDF exploit), the system’s researchers label examples, update the training data, and push model improvements, often in days or weeks. Some platforms even use federated learning, where anonymized signals from many companies help refine a global model. The more the system is used, the smarter it gets – spotting emergent threats faster. Administrators can also ‘teach’ the system by marking emails as safe or malicious, ensuring organizational context shapes the AI.
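The intent-driven business rule above (“no wire transfer requests are allowed outside finance”) might look like the following sketch. It assumes an upstream classifier has already assigned an intent label and that recipient departments come from a directory. The policy table, labels, and action names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Verdict:
    action: str
    reason: str


# Hypothetical policy table: intent -> departments allowed to receive it.
ALLOWED_RECIPIENTS = {
    "payment_request": {"finance"},
    "credential_request": {"it"},
}


def enforce_intent_policy(intent, recipient_department):
    """Apply a business rule keyed on the classified intent of an email."""
    allowed = ALLOWED_RECIPIENTS.get(intent)
    if allowed is None:
        return Verdict("deliver", "no policy for this intent")
    if recipient_department not in allowed:
        return Verdict("quarantine",
                       f"{intent} sent to {recipient_department}, allowed: {sorted(allowed)}")
    if intent == "payment_request":
        # Even inside finance, payment requests wait for out-of-band confirmation.
        return Verdict("hold_for_approval", "payment request needs out-of-band verification")
    return Verdict("deliver", "intent permitted for this recipient")
```

Keeping the policy in a table rather than in code lets administrators adjust it without retraining any model.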

In short, StrongestLayer’s AI email security in 2026 is not a static appliance. It constantly adapts, providing both machine-speed response and human-understandable reasoning.

Enterprise Benefits: Speed, Accuracy, and Automation

For CISOs and enterprise IT teams, AI-powered email security delivers concrete business benefits:

- Faster Detection and Response: AI systems analyze emails in real time, scoring content in milliseconds and running sandbox tests far faster than any manual review could. Threats can be blocked at delivery or at first click, drastically reducing the window of exposure. Rapid automation means malicious emails are quarantined before users get a chance to click a link, and suspected accounts get flagged before significant damage occurs.

- Higher Accuracy, Lower False Positives: By incorporating context, AI filters drastically reduce false alarms. They don’t just rely on brittle rules that might catch benign messages. This means end users see fewer “false spam” notifications, and helpdesk teams waste less time triaging non-threats. Meanwhile, detection rates for actual threats jump significantly because AI can spot nuanced patterns that evade simpler filters.

- Scale and Automation: Enterprises send and receive millions of emails daily. AI scales horizontally in the cloud, analyzing huge volumes without human intervention. It automatically quarantines threats, labels suspicious mail, and integrates with incident response tools. Security teams can set policies like “auto-phish quarantine” so staff never even see dangerous messages. This frees up analysts to focus on advanced investigations and reduces reliance on manual email forensics.

- Better Protection for Users: AI-driven filters often present warnings or block actions at the client side. For example, if a user clicks a suspicious link, an AI system can immediately intercept it with a warning page or redirect it through a safe-browsing check. This layer of on-click defense is far more dynamic than old-style spam alerts.

- Integration with Zero Trust and XDR: Leading AI email security platforms tie into an enterprise’s broader security fabric. They feed email threat intelligence to SIEMs and Extended Detection & Response (XDR) systems, contributing to a unified threat picture. If an email is flagged, related endpoints or identities can be automatically monitored. This holistic approach is exactly what modern CISOs aim for with Zero Trust architectures.

- Regulatory Compliance: Automated email scanning with AI can also support compliance. For regulated industries (finance, healthcare), the system can enforce DLP (Data Loss Prevention) rules intelligently. For example, if an email looks like it’s exfiltrating protected data, AI can quarantine or encrypt it, helping uphold internal policies and regulations such as HIPAA and GDPR with far less manual effort.
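As one example of DLP scanning, the sketch below looks for payment card numbers. A regex finds runs of 13 to 19 digits (allowing spaces or dashes), and the Luhn checksum discards most random digit strings. Card numbers are only one kind of protected data, and the quarantine action is an assumption. HIPAA or GDPR rules would need their own detectors.

```python
import re

# 13-19 digits, optionally separated by single spaces or dashes.
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(number: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum, expect % 10 == 0."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text):
    hits = []
    for match in CARD_RE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits


def dlp_action(text):
    """Hypothetical outbound rule: quarantine mail that carries a card number."""
    return "quarantine" if find_card_numbers(text) else "allow"
```

The widely used test number 4111 1111 1111 1111 passes the Luhn check and is caught, while changing its last digit makes it fail and the message is allowed.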

Overall, enterprises that adopt AI-based email protection gain agility and resilience. Attacks get caught faster and more accurately, remediation is automated, and security staff get high-value intelligence rather than noise. In 2026’s threat climate, this speed and precision are often the difference between stopping a breach and dealing with a major incident.

Final Thoughts

- Email is still the #1 threat vector in 2026. With attackers using AI, deepfakes, and zero-day tactics, enterprises face more dangerous email attacks than ever.

- Traditional rules-based filters fall short. Static signature and pattern-matching tools catch basic spam and known malware, but cannot adapt to new, tailored phishing or BEC scams. Up to half of targeted attacks can bypass old filters.

- AI email security uses machine learning and analytics. It examines email content with NLP, learns normal behavior patterns, and checks attachments/URLs in real time. By combining these signals, AI detects subtle malicious intent.

- Core AI techniques: Transformer-based language models interpret email text; anomaly detection spots irregular behavior; sandboxing and multi-engine scanning analyze attachments; and dynamic sender reputation scores provide context.

- Benefits for enterprises: AI filters detect threats faster and more accurately, reducing breach risk. They automate response (quarantine/malware removal), free up security teams, and integrate with broader defenses (XDR, SASE). False positives are also lower, improving user experience.

- Use cases: Enterprises have stopped personalized spear-phishing, AI-crafted BEC scams, and novel malware using these systems. For example, AI caught CEO impersonation emails by spotting writing style mismatches, and blocked image-based QR phishing by OCR scanning.

- Adaptivity is key: AI models continuously retrain on new threats. They use intent recognition and explainable algorithms so security teams can trust and verify decisions. Frequent updates and user feedback loops keep the protection current.

- Challenges remain: Attackers can try to poison or evade the AI (adversarial tactics), and implementing AI requires resources and skilled staff. Privacy and explainability need attention to maintain trust. But these hurdles are manageable.

- Enterprise strategy: CISOs should not rely on a single solution. AI email security is a critical layer, but it works best when combined with strong policies: employee training on phishing, rigorous MFA, out-of-band verification for transactions, and regular security hygiene.

In short, for enterprise email security in 2026, AI is not optional; it’s a necessity, and that is why StrongestLayer is here. Traditional filters, stuck in the last century, are now too fragile against the cunning attacks of today. By embracing AI-based email security solutions, CISOs can empower their teams with faster detection, smarter analysis, and adaptive defenses. The result is a safer enterprise posture that keeps organizations one step ahead of even the most creative cybercriminals.

FAQs (Frequently Asked Questions)

Q1: How do AI email filters protect against phishing?

AI email filters use machine learning and natural language processing to identify phishing content much more effectively than old rule-based systems. They analyze the actual text of the email (not just keywords) to understand the sender’s intent. For example, AI models can detect if a message is urgently requesting credentials or money, and if it matches common phishing patterns seen in other attacks.

AI filters also check links and attachments in detail – scanning the destination website and any included files in a sandbox environment. This means they can catch clever phishing emails that contain no obvious red flags (no known bad link, no malware attachment) by spotting subtle cues like unusual phrasing or a slightly spoofed domain. In practice, when a suspicious email arrives, the AI filter assigns it a high risk score and either blocks or quarantines it, preventing the user from interacting with the phishing content.

Q2: Why do traditional email filters fail to catch modern threats?

Traditional filters rely on static rules and known signatures. They might look for certain spam keywords or check if a link is on a blacklist. Modern attackers easily bypass these defenses by crafting new content that has never been seen before. For instance, a Business Email Compromise (BEC) email might have no attachments or malicious code – just social engineering text.

A rules-based filter can’t judge that content is suspicious if the email superficially looks legitimate. Additionally, attackers constantly change domain names and use tactics like URL shorteners or image-based text to fool simple filters. Because legacy systems don’t analyze context or behavior, they often let these emails through. In contrast, AI filters see beyond the surface: they notice if an email’s writing style is off, or if a link, while not flagged, leads to a newly registered site. This advanced analysis is what traditional filters lack, making them ineffective against today’s targeted scams.

Q3: Can AI stop zero-day email attacks?

AI can greatly reduce the risk from zero-day attacks, but nothing is 100% foolproof. A zero-day email attack means the malware or exploit in the email has never been seen before, so there’s no signature to match. AI helps because it doesn’t depend on signatures; it looks for anomalies. For example, an AI email security solution will notice if an email contains a file attachment with code that behaves like ransomware when analyzed in a sandbox, even if that specific ransomware has no prior fingerprint.

Or it might identify a URL as malicious because the site’s content and structure resemble known attack sites. In practical terms, AI can catch many zero-day attacks by flagging unusual patterns or behaviors. However, extremely sophisticated zero-days designed specifically to evade even dynamic analysis could still slip by, so AI is a huge improvement but should be part of a multi-layer defense.

Q4: What is the best enterprise email security for 2026?

The ideal enterprise email security solution in 2026 is StrongestLayer; it incorporates advanced AI and machine learning.

Key features are:

- Real-time threat intelligence integration

- NLP-based content analysis

- Behavioral anomaly detection

- Robust sandboxing of attachments/links

It works seamlessly with your existing email platform (whether cloud or on-premises) and fits into your broader security strategy. For CISOs, a best-in-class solution is one that constantly updates itself against new threats, offers transparency (so your team trusts the AI’s decisions), and can automate response actions.

Q5: How does AI detect Business Email Compromise (BEC) attempts?

Business Email Compromise scams often use minimal technical tricks, relying instead on impersonation of executives or partners. AI detects BEC by analyzing behavioral and contextual clues. First, it learns typical communication patterns: whom the CEO normally emails, what type of requests they send, and when they send them. If a request doesn’t match those patterns (e.g. “Finance, send $20,000 now” from a CEO’s address at 3 AM), AI flags it as unusual.

Language analysis also helps: the AI might detect that the phrasing, grammar, or tone of the email doesn’t match previous communications from that executive. Additionally, AI systems check the technical authenticity of the email – even if the domain is spoofed to look real, subtle differences in sender address or header metadata can be spotted. By combining these signals (behavioral anomaly + language mismatch + technical irregularities), AI catches BEC attempts that slip by simple filters, alerting the security team or blocking the message.

Q6: Are AI-driven email filters better than traditional ones?

Yes. When it comes to advanced threats, AI-driven filters outperform traditional filters. Traditional filters still do a decent job at bulk spam and well-known malware – but AI filters add a level of intelligence. They adapt to new attack methods and reduce manual tuning. In tests and real-world deployments, AI-driven systems consistently catch threats that legacy filters miss, especially targeted phishing and zero-day attacks.

They also tend to have fewer false positives because they consider more context. That said, an organization should not abandon basic security hygiene – blacklists, antispam, and antivirus still have value – but those measures alone are insufficient today. The industry consensus is to layer AI-based filtering on top of any existing protections, effectively creating a smarter, more resilient email defense.

Q7: How do AI filters handle attachments and malware in emails?

When an email has an attachment, AI filters subject the file to intensive analysis. First, they check metadata: file type, extension, and any macros or scripts inside. If something looks off (e.g. an Office document with a hidden macro), it’s an immediate concern. Next, the system often detours the attachment into a sandbox – a safe, virtual environment – and “runs” it.

If the file tries to install software, connect to the internet, or encrypt data in the sandbox, the AI recognizes those malicious behaviors. Meanwhile, a machine learning model trained on millions of malware samples might have already flagged the file as similar to known threats based on its structure. The filter also analyzes embedded URLs in the document the same way it handles links in emails. Finally, if the attachment appears malicious, the email is blocked or quarantined, and the incident logged. This layered approach – static ML analysis plus dynamic sandboxing – means AI filters can catch both known malware variants and brand-new (zero-day) malware hidden in email attachments.
