Why Pattern-Matching is Mathematically Obsolete in the AI Era

For two decades, email security relied on a simple premise: attackers repeat themselves. Build a database of malicious patterns, train ML models to recognize them, block anything that matches. This approach powered Proofpoint, Mimecast, Barracuda, and the entire Generation 2 security market.

Then Large Language Models broke that premise. Not as a feature gap that can be patched, but as a mathematical impossibility.

The Infinite Variation Problem

Pattern-matching works when adversaries have constraints. Human attackers copying templates created recognizable patterns: same phrases ("urgent wire transfer"), similar grammar mistakes, predictable link structures. Train a model on these patterns, catch 90% of attacks.

LLMs eliminated those constraints entirely. An attacker can now generate unlimited unique variations of the same attack—same malicious intent, completely different execution every single time. Your ML model trained on 10,000 historical phishing emails is looking for fingerprints that no longer exist.
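Here is a minimal Python sketch of the "fingerprint" problem. It assumes the simplest possible signature: a SHA-256 hash of the lowercased, whitespace-collapsed email body. Production filters use far richer features, and the sample emails are hypothetical. The point is that any wording change that normalization does not absorb produces a fingerprint the blocklist has never seen.

```python
import hashlib
import re

def fingerprint(body: str) -> str:
    """Exact-match signature: SHA-256 of the lowercased, whitespace-collapsed body."""
    normalized = re.sub(r"\s+", " ", body.strip().lower())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

# Hypothetical blocklist built from one previously seen phishing email.
known_bad = {fingerprint("URGENT: please process the attached wire transfer today.")}

# Two hypothetical variants with the same malicious intent.
variants = [
    "Urgent:   please process the attached WIRE transfer today.",  # trivial edit
    "Could you push the payment in the attachment through before close of business?",  # paraphrase
]

for v in variants:
    print(fingerprint(v) in known_bad)  # True, then False
```

Normalization absorbs the trivial case and spacing edits. The paraphrase carries the same intent and matches nothing.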

This isn't theoretical. In StrongestLayer's production environment, we're seeing AI-generated business email compromise (BEC) attacks with perfect grammar, context-aware personalization that references real organizational details, writing-style mimicry that matches executives' actual patterns, and dynamic social engineering that adapts to each recipient's role. Each email is unique. Pattern-matching has nothing to match against.

The Context Collapse

Here's where it gets worse: pattern-matching treats every email as an isolated artifact. But modern AI-generated attacks are contextually sophisticated in ways that require understanding the relationship between sender and recipient.

Example: A law firm received an email from someone claiming to be a new partner at a client company, asking to update wire transfer instructions for an upcoming retainer payment.

Legacy security analysis: Legitimate domain ✓, valid email authentication ✓, perfect grammar ✓, no suspicious links ✓, no known malicious patterns ✓. Delivered to inbox.

But pattern-matching can't determine that the "new partner" didn't appear on LinkedIn, in the company directory, or in state bar records. It can't assess that in that industry CFOs, not partners, handle payment updates. It can't recognize that law firms have documented procedures for banking changes that don't involve email. It can't detect that the urgency language contradicted the sender's claimed role.

These aren't patterns you can train on. They're reasoning about business context, organizational hierarchies, and whether the email's premise makes sense.

The Speed Asymmetry

Even if you could continuously retrain ML models on new attack variants, you're fundamentally playing defense in a game where offense has infinite moves.

The math is brutal:

- Attacker with LLM: Generate 10,000 unique phishing variants in 30 minutes

- Security vendor with pattern-matching: Collect samples, label them, retrain the model, deploy: 2-3 weeks in the best case
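A back-of-the-envelope calculation using only the two figures above shows the scale of the gap. It assumes the attacker sustains the same generation rate around the clock for the whole retraining window. That is an idealization, so read the result as an order of magnitude rather than a measured count.

```python
# Figures from the comparison above. Assumption: a constant, round-the-clock
# generation rate for the entire retraining window.
VARIANTS_PER_BATCH = 10_000
MINUTES_PER_BATCH = 30

batches_per_day = (24 * 60) // MINUTES_PER_BATCH          # 48 batches per day
variants_per_day = VARIANTS_PER_BATCH * batches_per_day   # 480,000 variants per day

for weeks in (2, 3):
    window_days = weeks * 7
    new_variants = variants_per_day * window_days
    print(f"{weeks}-week retraining cycle: {new_variants:,} unseen variants")
# 2-week retraining cycle: 6,720,000 unseen variants
# 3-week retraining cycle: 10,080,000 unseen variants
```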

You're always training on last month's attacks while this month's attacks bypass you entirely. This is why false positive rates are exploding at legacy vendors. Broaden patterns to catch more variants? You flag legitimate emails. Tighten patterns to reduce false positives? You miss novel attacks. You can't tune your way out of this structural problem.
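The tuning dilemma can be shown with a toy threshold sweep. The similarity scores below are invented for illustration and are not measurements. Three known-template attacks score high and three novel LLM variants score low. Among the legitimate emails, three finance emails share enough wording with attacks to score in the middle. No single threshold separates the two groups.

```python
# Hypothetical similarity scores (0..1) against a pattern database; illustrative only.
malicious_scores = [0.95, 0.90, 0.85, 0.40, 0.35, 0.30]   # last three: novel LLM variants
legitimate_scores = [0.10, 0.20, 0.25, 0.45, 0.60, 0.70]  # last three: real finance emails

def outcomes_at(threshold: float) -> tuple[int, int]:
    """Return (missed attacks, false positives) when blocking every score >= threshold."""
    missed = sum(score < threshold for score in malicious_scores)
    false_positives = sum(score >= threshold for score in legitimate_scores)
    return missed, false_positives

for threshold in (0.8, 0.5, 0.3):
    missed, fp = outcomes_at(threshold)
    print(f"threshold={threshold}: missed={missed}, false_positives={fp}")
# threshold=0.8: missed=3, false_positives=0
# threshold=0.5: missed=3, false_positives=2
# threshold=0.3: missed=0, false_positives=3
```

A strict threshold misses every novel variant. Lowering it far enough to catch them also blocks the legitimate finance emails.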

Why "AI-Powered" Features Aren't Enough

Most legacy vendors saw this coming and added "AI features": using LLMs to analyze emails that pattern-matching flagged, consulting LLMs as a "second opinion" module, and generating better phishing simulations for training.

But this still depends on pattern-matching as the first filter. If the pattern-matcher misses the email, the LLM never sees it. That's not a solution. That's a band-aid on an architectural wound.
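The weakness of the "second opinion" design shows up in its control flow. In this hypothetical sketch, `toy_pattern_filter` (a phrase match) and `toy_llm_review` (a reviewer that flags everything it sees) are stand-ins and not any vendor's components. Short-circuit evaluation means the LLM is never invoked on an email the filter lets through.

```python
from typing import Callable, List

def gated_pipeline(
    emails: List[str],
    pattern_flags: Callable[[str], bool],
    llm_review: Callable[[str], bool],
) -> List[str]:
    """'Second opinion' design: the LLM only reviews emails the pattern filter flagged."""
    blocked = []
    for email in emails:
        # `and` short-circuits: if pattern_flags returns False, llm_review is never called.
        if pattern_flags(email) and llm_review(email):
            blocked.append(email)
    return blocked

reviewed: List[str] = []  # records which emails the LLM actually saw

def toy_pattern_filter(email: str) -> bool:
    # Hypothetical stand-in for a signature/ML filter keyed on a known phrase.
    return "wire transfer" in email.lower()

def toy_llm_review(email: str) -> bool:
    # Hypothetical stand-in for an LLM judgment; it deems everything it sees malicious.
    reviewed.append(email)
    return True

emails = [
    "Urgent wire transfer needed today",
    "Please update the retainer payment details before Friday",  # novel phrasing
]
blocked = gated_pipeline(emails, toy_pattern_filter, toy_llm_review)
print(blocked)   # ['Urgent wire transfer needed today']
print(reviewed)  # ['Urgent wire transfer needed today']
```

Even a perfect reviewer cannot block the second email, because it never reaches the reviewer.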

Generation 3: Reasoning Over Patterns

At StrongestLayer, we built TRACE (Threat Reasoning AI Correlation Engine) to ask fundamentally different questions. Not "does this match a known bad pattern?" but "does the reasoning behind this email make sense?"

Traditional pattern-matching stack: Extract features → compare to database → score similarity → block if threshold exceeded.
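The four pattern-matching steps can be sketched in a few lines of Python. This is a deliberately simplified, hypothetical implementation. Word tokens stand in for features, Jaccard similarity stands in for the scoring model, and a one-entry list stands in for the pattern database.

```python
import re

def extract_features(body: str) -> set[str]:
    """Extract features: here, just the set of lowercase word tokens."""
    return set(re.findall(r"[a-z']+", body.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    """Score similarity: |intersection| / |union| (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

# Hypothetical "database" of features from previously labeled malicious emails.
PATTERN_DB = [extract_features("Urgent wire transfer request, please send payment today")]

def should_block(body: str, threshold: float = 0.5) -> bool:
    features = extract_features(body)
    # Compare to the database and keep the best similarity score.
    best = max(jaccard(features, known) for known in PATTERN_DB)
    # Block if the threshold is exceeded.
    return best > threshold

print(should_block("URGENT wire transfer request - please send payment today!"))  # True
print(should_block("Hi Dana, our new banking details for the retainer are attached."))  # False
```

The second email is a payment-change request like the one in the law-firm example. It shares no tokens with the stored pattern, scores 0.0, and is delivered.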

TRACE reasoning stack: Analyze email intent → query organizational context (relationships, roles, processes) → evaluate whether the email's reasoning holds up → explain the decision.
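The following toy sketch shows the general shape of a reasoning stack, applied to the law-firm example. It is not TRACE's implementation. The `Intent`, `OrgContext`, and `Verdict` types, the addresses, and the hand-written checks are all hypothetical. In the architecture described here, an LLM would perform the intent analysis and the evaluation. The if-statements only illustrate the kinds of questions being asked, and how each failed check becomes a clause of a human-readable explanation rather than a bare score.

```python
from dataclasses import dataclass, field

@dataclass
class OrgContext:
    """Hypothetical organizational context: relationships, roles, processes."""
    directory: dict[str, str]        # known sender address -> role
    payment_change_roles: set[str]   # roles that normally request payment changes
    payment_change_channel: str      # documented channel for banking changes

@dataclass
class Intent:
    """Stand-in for the output of an intent-analysis step (in practice, an LLM call)."""
    sender: str
    claimed_role: str
    action: str    # e.g. "update_payment_details"
    channel: str   # channel the request arrived through
    urgent: bool

@dataclass
class Verdict:
    block: bool
    reasons: list[str] = field(default_factory=list)

def assess_request(intent: Intent, ctx: OrgContext) -> Verdict:
    """Check whether the email's premise holds up against organizational context."""
    reasons = []
    if intent.sender not in ctx.directory:
        reasons.append(f"sender {intent.sender} is not a known contact")
    if intent.action == "update_payment_details":
        if intent.claimed_role not in ctx.payment_change_roles:
            reasons.append(f"{intent.claimed_role} role does not normally request payment changes")
        if intent.channel != ctx.payment_change_channel:
            reasons.append(f"request bypasses documented process ({ctx.payment_change_channel})")
        if intent.urgent:
            reasons.append("urgency language on a payment change")
    return Verdict(block=bool(reasons), reasons=reasons)

ctx = OrgContext(
    directory={"cfo@client.example": "CFO"},
    payment_change_roles={"CFO"},
    payment_change_channel="vendor portal",
)
suspicious = Intent("new.partner@client.example", "partner", "update_payment_details", "email", urgent=True)
verdict = assess_request(suspicious, ctx)
print(("Blocked because " + " + ".join(verdict.reasons)) if verdict.block else "Delivered")
```

The "new partner" request fails all four checks. The same action from the known CFO through the vendor portal, without urgency, produces no reasons and is delivered.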

The key difference: we can detect threats we've never seen before because we're not looking for patterns. We're evaluating whether the email makes sense given the business context.

The Production Results

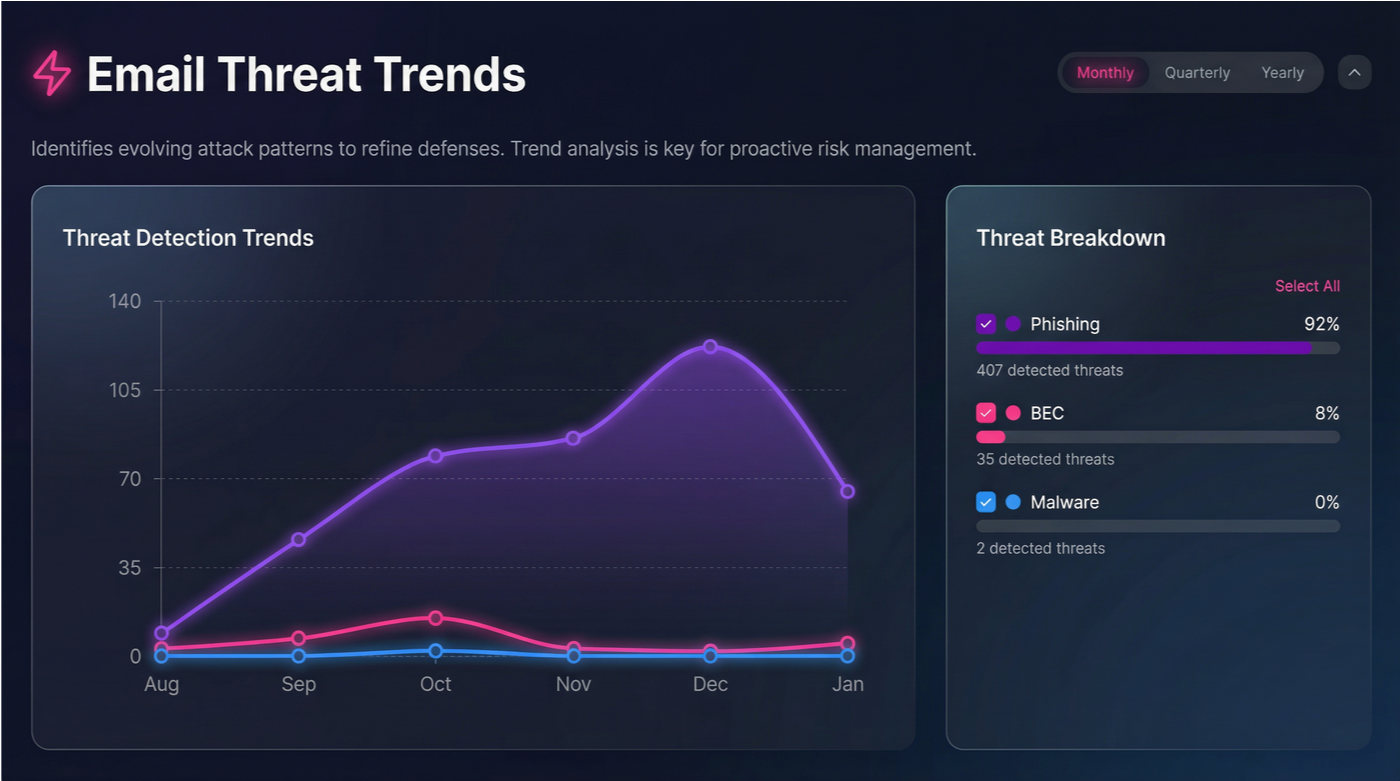

Our detection metrics in regulated industries (law firms, pharma, financial services):

- 80% reduction in false positive investigations

- Catching AI-generated BEC attacks that bypassed Proofpoint and Mimecast

- Sub-200ms analysis latency even for complex reasoning

- 1% false positive rate on novel attacks vs. 15-20% industry average

But here's what matters for CISOs: every decision comes with an explanation. TRACE doesn't say "malicious score: 87." It says: "Blocked because CFO role doesn't normally request wire transfers via email + request bypasses documented vendor payment process + urgency language inconsistent with sender's typical communication style."

That's auditable. That's defensible when your general counsel asks questions. That's the difference between pattern-matching and reasoning.

The Uncomfortable Truth

Legacy vendors can't rebuild their architecture without abandoning their existing codebases, retraining their entire detection teams, and essentially starting over. They're selling AI features because they can't sell AI architecture.

CISOs evaluating email security in 2026 should ask vendors these questions:

- Does your LLM orchestrate the entire detection process, or just consult as a module?

- Can you detect malicious intent regardless of whether you've seen this attack variant before?

- How do you maintain performance at enterprise scale without relying on pattern-matching?

- Can you explain your decisions in terms a compliance officer would understand?

If your current vendor can't answer these clearly, you're not protected against AI-era threats. Pattern-matching was brilliant for the problems of 2005. But the adversary evolved. The question is whether your security architecture will evolve with it.
