What 18 Months of Security Evaluations Taught Me About Buyer Priorities in 2026

I spent years at McAfee and Proofpoint before joining StrongestLayer. I thought I understood what OEM and enterprise security buyers needed—after all, I’d seen the playbook work for two of the category leaders.

Then I moved to the startup side. In just six months, the conversations I’ve had with CISOs, compliance officers, and security teams have shown me something striking: the priorities that drove purchasing decisions then aren’t the priorities driving them today.

The market has shifted faster than I expected. And many vendors are still selling to 2019 buyers.

Here’s what I’m seeing.

The False Positive Crisis Is Real

The most consistent theme across every conversation: security teams are drowning in noise.

One pharmaceutical company I spoke with was investigating 200+ security alerts per week. When we analyzed their data together, 190 were false positives. That’s roughly 95% of the team’s investigation effort spent validating legitimate emails.

The math is brutal: 15 hours per week per analyst chasing false alarms. At loaded cost, that’s $150K-200K annually per person in wasted investigation time. And that’s before you factor in the opportunity cost—threats they’re not hunting because they’re buried in false positive triage.
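The arithmetic behind those two figures can be sketched in a few lines. This is a minimal illustration, not a costing model: the function names are mine, and the 52-week year is an assumption. The dollar figure is left out because it depends on a loaded hourly rate that varies by organization.

```python
def false_positive_share(total_alerts: int, false_positives: int) -> float:
    """Fraction of investigated alerts that turned out to be legitimate email."""
    if total_alerts <= 0:
        raise ValueError("total_alerts must be positive")
    if not 0 <= false_positives <= total_alerts:
        raise ValueError("false_positives must be between 0 and total_alerts")
    return false_positives / total_alerts


def annual_hours(hours_per_week: float, weeks_per_year: int = 52) -> float:
    """Hours per year implied by a weekly figure (52 weeks is an assumption)."""
    return hours_per_week * weeks_per_year


# Figures quoted above: 190 false positives out of 200 weekly alerts,
# and 15 hours per week per analyst spent on false alarms.
share = false_positive_share(total_alerts=200, false_positives=190)
wasted_hours = annual_hours(15)

print(f"False positive share of alerts: {share:.0%}")
print(f"Hours per analyst per year on false alarms: {wasted_hours:.0f}")
```

Multiplying the annual hours by your own loaded hourly cost gives the per-analyst figure for your team.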

But here’s what surprised me: these teams don’t blame their security vendors. They assume false positives are just the cost of doing business. They’ve internalized that email security means choosing between missing threats and investigating hundreds of legitimate emails.

This assumption is wrong. But it’s so deeply embedded that buyers don’t even ask vendors about false positive rates during evaluations. They ask about detection capabilities, threat intelligence, and integration—but not accuracy.

The insight: False positive reduction isn’t a feature request. It’s an unspoken crisis that buyers have accepted as unsolvable. Vendors who can demonstrate measurably better accuracy—not just “improved” but 10x better—are solving a problem buyers didn’t know was solvable.

Compliance Is Driving Security Decisions

I expected CISOs to lead security evaluations. What I didn’t expect: how often General Counsels, Chief Compliance Officers, and Heads of Risk are in the room—and how often they have veto power.

In regulated industries, email breaches aren’t just security incidents. They’re regulatory events that trigger mandatory reporting, client notifications, potential fines, insurance claims, and board-level escalations.

This changes the evaluation criteria fundamentally.

CISOs evaluate: Can you catch sophisticated attacks?

Compliance evaluates: Can you prove to regulators that your controls actually work?

The difference matters. Pattern-matching systems show “threats blocked” dashboards. But when a compliance officer asks “Can you show me why you blocked this specific email in terms an auditor will understand?” most vendors show scores, not reasoning.

“This email scored 87 out of 100 for malicious intent” doesn’t satisfy regulatory examination. “This email was blocked because the CFO doesn’t normally request wire transfers and the request bypassed your documented approval process” does.

The insight: In regulated industries, explainability isn’t a nice-to-have. It’s table stakes. Vendors who can’t provide audit-ready reasoning for every decision are creating compliance risk, not solving it.

Competitive Displacement Is The Dominant Motion

Almost no one I talk to is buying email security for the first time. They’re already spending $45K-200K annually on Proofpoint, Mimecast, or Abnormal Security.

The conversation isn’t “Do we need email security?” It’s “Is our current solution actually working?”

What’s driving re-evaluation? Not features. Not threat intelligence updates. It’s breach near-misses.

A law firm runs Mimecast. An AI-generated BEC email impersonating a senior partner and requesting an urgent wire transfer for a “confidential settlement” lands in the inbox. Their wire approval process catches it. But the email security didn’t.

That creates a reevaluation moment. Not because the buyer is dissatisfied with features—but because they have proof their security failed.

The insight: The trigger for competitive displacement isn’t feature comparison. It’s evidence of detection failure. Buyers need to see what their incumbent missed before they’ll consider alternatives. This is why proof-of-concept evaluations that run in parallel with existing solutions are so effective—they make the gap visible.

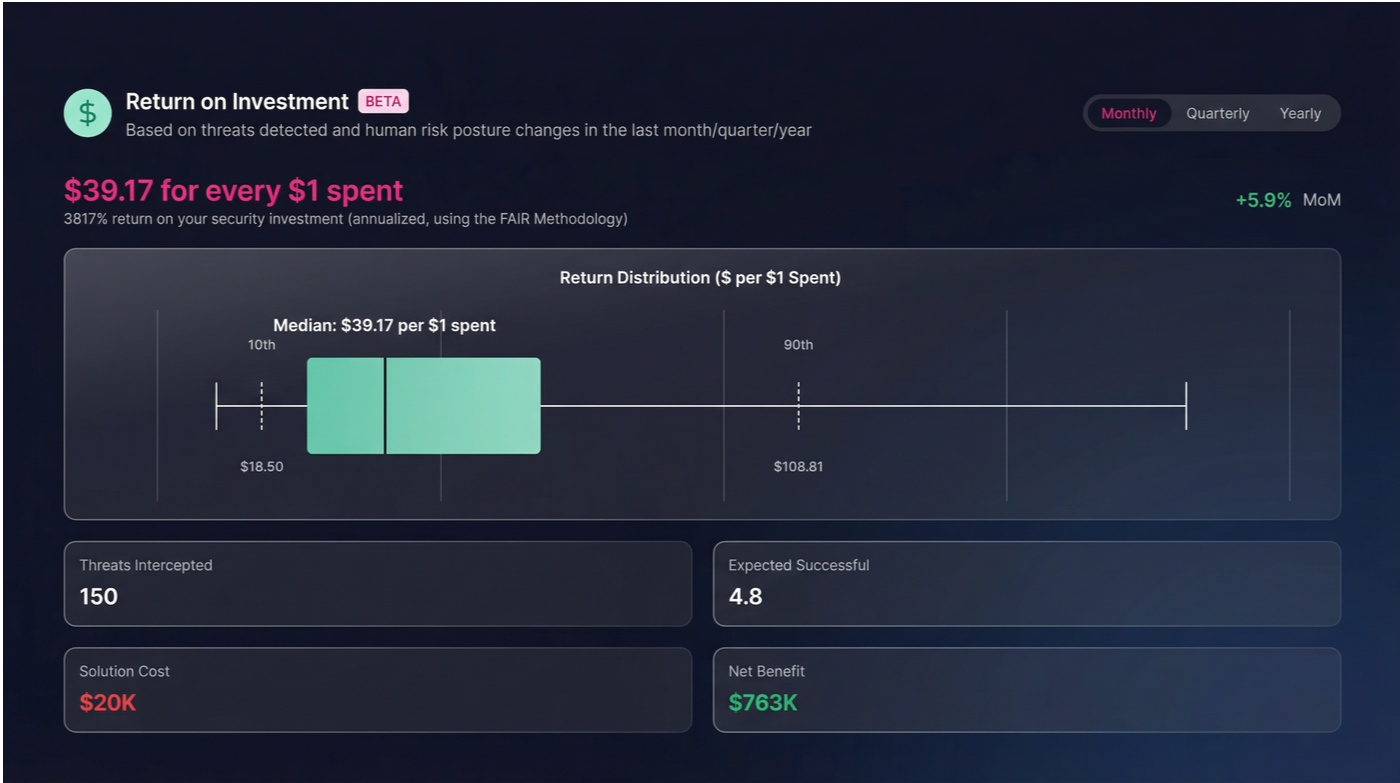

ROI Expectations Have Compressed

Three years ago, enterprise security buyers accepted 18-24 month ROI windows. Today, they want ROI within 12 months—often sooner.

Why the shift? Budget scrutiny post-2023. Every security investment now competes with headcount, product development, and customer acquisition spend.

This changes the ROI conversation. Buyers aren’t just calculating “What does this prevent?” They’re calculating “What does this save?”

The clearest ROI drivers I see:

Analyst time savings: If your security team spends 20 hours/week investigating false positives, and you can reduce that 80%, you’re saving $120K-160K annually per analyst. That’s measurable, immediate, and shows up in productivity metrics.

Avoided breach costs: Even “small” email breaches cost $500K+ in forensics, notifications, legal, remediation, and customer impact. Preventing one breach pays for the solution 3-5x over. But this ROI is hypothetical until you can show specific attacks your incumbent missed that you caught.

Compliance efficiency: Regulatory audits require security teams to document controls. If your system provides full reasoning for every decision, audit prep time drops 60-70%. That’s 40-60 analyst hours per audit cycle—direct cost savings.

The insight: Buyers need to see ROI hit within 12 months. The winners will be vendors who can show measurable operational savings (time, productivity, compliance burden) in addition to prevented breach costs.
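To make the 12-month test concrete, here is a rough payback sketch for the analyst-time driver alone. The function names, the $150 loaded hourly rate, and the choice of a $45K annual contract (the low end of the spend range mentioned earlier) are illustrative assumptions, not figures from any specific deal.

```python
def annual_time_savings(
    hours_per_week: float,
    reduction: float,
    hourly_loaded_cost: float,
    analysts: int = 1,
    weeks_per_year: int = 52,
) -> float:
    """Dollar value of analyst hours freed up by cutting false positive triage."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be a fraction between 0 and 1")
    return hours_per_week * reduction * hourly_loaded_cost * analysts * weeks_per_year


def payback_months(annual_cost: float, annual_savings: float) -> float:
    """Months until cumulative savings cover one year of spend."""
    if annual_savings <= 0:
        return float("inf")
    return 12 * annual_cost / annual_savings


# 20 hours/week and an 80% reduction come from the text above.
# The hourly rate and contract cost are hypothetical placeholders.
savings = annual_time_savings(hours_per_week=20, reduction=0.8, hourly_loaded_cost=150)
months = payback_months(annual_cost=45_000, annual_savings=savings)

print(f"Annual savings per analyst: ${savings:,.0f}")
print(f"Payback period: {months:.1f} months")
```

Avoided breach costs are deliberately excluded here, since that part of the ROI stays hypothetical until a parallel evaluation shows what the incumbent missed.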

Product Velocity Matters More Than Product Maturity

I used to think buyers in regulated industries prioritized vendor stability and product maturity. “Nobody gets fired for buying Proofpoint.”

That’s changing.

What I’m hearing now: frustration with vendor responsiveness. Feature requests that disappear into 18-month roadmaps. Custom detection rules that take 6-8 weeks to implement. Support tickets that escalate slowly.

Meanwhile, buyers are seeing attackers iterate in days. AI-generated attacks evolve weekly. Threat actors adapt to defenses in real-time.

The gap between vendor release cycles (quarterly, annually) and threat evolution (continuous) is becoming untenable.

Here’s a real example: During an evaluation, a financial services firm asked their incumbent vendor (a major SEG provider) for a custom detection rule related to a specific vendor impersonation pattern they were seeing. The vendor quoted 6-8 weeks for roadmap review, then 4-6 months for implementation.

The firm didn’t wait. They found a vendor who could ship it in days.

The insight: In the AI era, vendor velocity is becoming a competitive advantage. Buyers want partners who move at the speed of threats, not the speed of enterprise software release cycles. Startups who can demonstrate fast iteration are winning deals against incumbents who can’t.

What This Means For The Market

If I step back and look at these five patterns together, I see a fundamental shift:

The old playbook: Sell on threat detection capabilities, threat intelligence feeds, and integration breadth. Assume buyers accept false positives. Target CISOs exclusively. Compete on feature parity.

The new playbook: Lead with accuracy and explainability. Address compliance requirements as first-class concerns. Demonstrate competitive gaps with proof-of-concept evidence. Show operational ROI within 12 months. Prove you can iterate faster than threats evolve.

Buyers aren’t asking for incremental improvements. They’re looking for architectural differences that enable fundamentally better outcomes.

The vendors who understand this—and can educate buyers on why architecture matters—will define the next generation of email security.

About the Author:

Karen is Chief Commercial Officer at StrongestLayer, where she leads go-to-market strategy for AI-native email security. She works directly with security and compliance leaders in regulated industries to understand evolving buyer priorities.

Here’s a question that should make compliance officers uncomfortable: Can your email security explain its decisions in terms a regulator will understand?

Not “how many threats did you block?” That’s easy—every vendor has dashboards showing blocked email counts.

The harder question: “Why did you block this specific email? What made it malicious? Can you walk me through your reasoning in a way I can document for an audit?”

For most email security platforms, the answer is: No.

And that’s creating a compliance gap that regulated industries can’t afford to ignore.

The Regulatory Stakes Are Higher Than Ever

Let’s be clear about what’s at risk when email security fails in regulated industries.

For law firms: State bar breach notification requirements kick in immediately. Client privilege violations can destroy attorney-client relationships. Malpractice claims follow. Insurance premiums spike. Reputation damage in a profession built on trust can cost more than the breach itself.

For pharmaceutical companies: HIPAA violations start at $100 per violation and can reach $50,000 per violation. Annual caps max out at $1.5M, but that’s per violation category. FDA scrutiny on data integrity follows. Clinical trial data exposure compromises intellectual property. Research partnerships require notification. Patient trust erodes.

For financial services: SEC and FINRA enforcement actions for inadequate cybersecurity controls. SOX compliance failures that affect audit opinions. GLBA violations carrying civil and criminal penalties. State-by-state customer notification requirements. Credit monitoring obligations. Class action exposure.

These aren’t theoretical. These are automatic regulatory triggers the moment email security fails to prevent a breach.

Why Current Approaches Create Compliance Risk

Most email security platforms—Proofpoint, Mimecast, Abnormal Security—operate on fundamentally similar architectures: pattern-matching or ML-based statistical models.

From a compliance perspective, both approaches have the same problem: they can’t explain their decisions in business terms.

The Black Box Problem

When compliance officers or auditors ask “How does your email security work?” vendors typically show:

- Dashboards with blocked threat counts
- Threat intelligence feeds
- “Malicious scores” from ML models

But when you dig deeper—“What made this email malicious?”—the answers get vague.

Pattern-matching systems point to the rule that fired. But rules can’t explain context. “This email matched pattern #4,782” doesn’t satisfy regulatory examination.

ML-based systems are worse. They generate probabilistic scores from neural networks trained on millions of emails. Even the engineers who built them can’t fully articulate why the model scored a specific email as 87/100 malicious.

The Audit Room Conversation

Picture this scenario during a regulatory examination:

Examiner: “Walk me through how your email security controls work.”

You: “We use AI-powered detection that analyzes hundreds of signals.”

Examiner: “Show me a specific example. Why was this email blocked?”

You: “Our ML model determined it was high-risk. Score of 87 out of 100.”

Examiner: “What does 87 mean? What factors contributed to that score?”

You: “The model evaluates multiple features. It’s a proprietary algorithm.”

Examiner: “Can you document which specific factors made this email suspicious?”

You: “The vendor doesn’t provide that level of detail. But the system is very accurate.”

This conversation happens in regulatory examinations across regulated industries. And it’s a compliance problem.

You can’t demonstrate that your controls work if you can’t explain how they make decisions.

The False Positive Compliance Dilemma

There’s a second compliance problem most organizations don’t talk about: false positives create their own compliance risk.

Pattern-matching and ML systems face an impossible trade-off:

- Tighten detection rules → miss novel attacks → regulatory exposure from breaches
- Broaden detection rules → flag legitimate emails → business disruption

In regulated industries, both outcomes create compliance problems.

Missing threats triggers breach notification, regulatory reporting, and all the consequences outlined above.

Blocking legitimate emails disrupts business operations in ways that create compliance and legal risk:

- Law firms: Client communications delayed, deal documents quarantined, court filing deadlines missed
- Pharma: Supply chain approvals blocked, clinical trial coordination interrupted, regulatory submission delays
- Financial services: Trading notifications quarantined, client instructions delayed, settlement timing affected

I’ve seen this play out: A law firm’s email security quarantined a client’s DocuSign for a time-sensitive M&A transaction. The email arrived overnight. It sat in quarantine for 18 hours because no one checked quarantine folders outside business hours. The deal nearly collapsed. The client threatened to terminate the relationship.

When the firm’s managing partner asked “Why was this blocked?” IT couldn’t provide a clear answer. The security vendor said “It looked suspicious. You can whitelist DocuSign if you want.”

That’s not security. That’s risk transfer.

The AI-Generated Attack Problem

Here’s what’s making this worse: AI-generated attacks are specifically designed to bypass pattern-matching.

A pharmaceutical company shared this example with me during a security review:

Email impersonating their VP of Clinical Research. Sent to finance. Requesting urgent wire transfer for clinical trial site due to “unexpected regulatory filing costs.”

Their existing security platform (Proofpoint) analyzed it:

- Sender domain: Legitimate (authentication passed)
- Content: No match to known phishing patterns
- Links: None
- Attachments: None
- Grammar: Perfect
- Verdict: Delivered to inbox

What actually happened:

- The VP’s account wasn’t compromised
- Attacker researched the company’s active clinical trials
- Understood organizational hierarchy
- Crafted contextually sophisticated email
- Targeted someone with wire transfer authority

The attack almost succeeded. Finance had a policy requiring dual approval for wire transfers over $50K. The second approver called the VP to verify. Fraud discovered.

The compliance problem:

From a regulatory perspective, their email security failed. The attack reached an inbox. Their controls didn’t detect it. Only manual verification—luck and good process—prevented financial loss.

When this gets documented for SOX testing, board reporting, and regulatory filings, the organization has to explain: “Our email security missed it, but our payment approval process caught it.”

That’s not a security success story. That’s a control failure that happened to be backstopped by a different control.

What Compliant-By-Design Email Security Looks Like

Let me be clear: I’m not arguing that every email security platform is non-compliant. I’m arguing that explainability and auditability should be architectural requirements, not afterthoughts.

Here’s what that means in practice:

Requirement 1: Reasoning-Based Decisions

Instead of “malicious score: 87,” security decisions should provide business-logic reasoning:

“Blocked because:

- VP of Clinical Research doesn’t normally request wire transfers (role inconsistency with request type)
- Request bypasses documented vendor payment approval process (policy violation)
- Urgency language inconsistent with sender’s typical communication patterns (behavioral anomaly)
- Sender hasn’t previously initiated financial requests (historical pattern break)”

This is specific, verifiable, auditable, and understandable to non-technical stakeholders.
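As a toy illustration of how that kind of reasoning could be produced, the sketch below checks a request against a sender profile and returns plain-language reasons. Everything here is hypothetical: the `SenderProfile` and `Request` fields, the four checks, and the wording are my own stand-ins, not a description of how any vendor's product works.

```python
from dataclasses import dataclass, field


@dataclass
class SenderProfile:
    """Hypothetical summary of what is normal for a sender."""
    role: str
    typical_request_types: set = field(default_factory=set)
    has_initiated_financial_requests: bool = False
    typically_urgent: bool = False


@dataclass
class Request:
    """Hypothetical summary of what an incoming email is asking for."""
    request_type: str
    is_financial: bool
    uses_urgent_language: bool
    follows_approval_process: bool


def explain_block(profile: SenderProfile, request: Request) -> list:
    """Return business-terms reasons to block; an empty list means no concern."""
    reasons = []
    if request.request_type not in profile.typical_request_types:
        reasons.append(
            f"{profile.role} doesn't normally request {request.request_type}s "
            "(role inconsistency with request type)"
        )
    if not request.follows_approval_process:
        reasons.append("Request bypasses documented approval process (policy violation)")
    if request.uses_urgent_language and not profile.typically_urgent:
        reasons.append(
            "Urgency language inconsistent with sender's typical "
            "communication patterns (behavioral anomaly)"
        )
    if request.is_financial and not profile.has_initiated_financial_requests:
        reasons.append(
            "Sender hasn't previously initiated financial requests "
            "(historical pattern break)"
        )
    return reasons


vp = SenderProfile(role="VP of Clinical Research", typical_request_types={"trial status update"})
wire = Request(
    request_type="wire transfer",
    is_financial=True,
    uses_urgent_language=True,
    follows_approval_process=False,
)
for reason in explain_block(vp, wire):
    print("-", reason)
```

The point of the design is that each reason maps to a check a human can verify independently, which a single numeric score cannot offer.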

Requirement 2: Audit-Ready Documentation

Every security decision should generate documentation that satisfies regulatory examination:

- What was detected
- Why it was flagged
- What business logic was violated
- How the decision aligns with security policies

This isn’t just “nice to have” for compliance teams. It’s required for regulatory reporting, SOX testing, and breach investigations.
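One way to picture such a record is a small structure with one field per item in that list, serialized so it can be attached to an audit file. This is a sketch under my own assumptions: the field names, the message ID, and the policy reference are invented for illustration and do not reflect any vendor's export format.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Reason:
    finding: str   # why it was flagged, in plain language
    category: str  # what business logic was violated


@dataclass
class DecisionRecord:
    message_id: str        # hypothetical identifier
    verdict: str           # e.g. "blocked" or "delivered"
    detected: str          # what was detected
    reasons: list          # list of Reason entries
    policy_reference: str  # how the decision aligns with security policy

    def to_audit_json(self) -> str:
        """Serialize the whole record, nested reasons included, as JSON."""
        return json.dumps(asdict(self), indent=2)


record = DecisionRecord(
    message_id="example-0001",
    verdict="blocked",
    detected="Executive impersonation requesting an urgent wire transfer",
    reasons=[
        Reason("Sender role doesn't normally request wire transfers", "role inconsistency"),
        Reason("Request bypasses documented payment approval process", "policy violation"),
        Reason("Urgency language unusual for this sender", "behavioral anomaly"),
        Reason("Sender has not previously initiated financial requests", "historical pattern break"),
    ],
    policy_reference="Vendor payment approval policy (placeholder reference)",
)

print(record.to_audit_json())
```

Because every field is plain text, the same record can be read by an auditor, a compliance officer, or a board member without translation.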

Requirement 3: Measurable Accuracy

Compliance teams need to document that controls work as designed. That requires measurable accuracy metrics:

- False positive rate (not just spam, but sophisticated attacks)
- Detection rate on novel attacks (threats never seen before)
- Time to detection and remediation
- Explainability rate (what % of decisions can be explained in business terms)

If your vendor can’t provide these metrics, you can’t demonstrate control effectiveness.
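For teams that want to compute these themselves from reviewed decisions, here is a minimal sketch. The `Outcome` fields and the seven sample rows are made-up toy data, and the definitions are my assumptions: false positive rate is flagged legitimate emails over all legitimate emails, and explainability rate is measured over flagged emails only. Time to detection is omitted because it needs timestamps.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Outcome:
    flagged: bool    # the system blocked or quarantined the email
    malicious: bool  # ground truth established by later review
    novel: bool      # attack type not seen before
    explained: bool  # decision came with business-terms reasoning


def ratio(numerator: int, denominator: int) -> Optional[float]:
    """Return numerator/denominator, or None when there is nothing to measure."""
    return numerator / denominator if denominator else None


def control_metrics(outcomes: list) -> dict:
    legit = [o for o in outcomes if not o.malicious]
    novel_attacks = [o for o in outcomes if o.malicious and o.novel]
    flagged = [o for o in outcomes if o.flagged]
    return {
        "false_positive_rate": ratio(sum(o.flagged for o in legit), len(legit)),
        "novel_detection_rate": ratio(sum(o.flagged for o in novel_attacks), len(novel_attacks)),
        "explainability_rate": ratio(sum(o.explained for o in flagged), len(flagged)),
    }


# Toy data for illustration only.
sample = [
    Outcome(flagged=True, malicious=True, novel=True, explained=True),
    Outcome(flagged=True, malicious=True, novel=False, explained=True),
    Outcome(flagged=True, malicious=False, novel=False, explained=False),
    Outcome(flagged=False, malicious=False, novel=False, explained=False),
    Outcome(flagged=False, malicious=False, novel=False, explained=False),
    Outcome(flagged=False, malicious=False, novel=False, explained=False),
    Outcome(flagged=False, malicious=True, novel=True, explained=False),
]

metrics = control_metrics(sample)
for name, value in metrics.items():
    print(name, "n/a" if value is None else f"{value:.0%}")
```

Whatever definitions you choose, write them down next to the numbers, since an examiner will ask how each rate was calculated.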

Requirement 4: Proactive Risk Identification

Instead of just blocking threats, security should identify risk patterns:

- Employees interacting with high-risk senders
- Departments with elevated social engineering exposure
- Business processes that bypass security controls
- Third-party relationships with anomalous communication patterns

This provides compliance officers with data for risk assessments, evidence of continuous monitoring, and board-level reporting material.

The Real Cost Of “Good Enough”

Let me share a real example (details anonymized) of what happens when “good enough” email security fails in a regulated environment.

A law firm believed they were adequately protected with Mimecast. Then an AI-generated client impersonation attack bypassed their security, exposing privileged communications related to ongoing litigation.

The compliance cascade:

- State bar notification: Required within 72 hours
- Client notification and explanation: Contractually mandated
- Forensic investigation: $125K to determine scope of exposure
- Legal counsel for malpractice exposure: $200K+
- Cyber insurance claim and premium increase: 20% increase = $35K annually
- IT remediation and security improvements: $180K
- Client relationship damaged: One client moved to another firm = $400K annual revenue

Total documented cost: $940K+

The law firm’s annual Mimecast cost: $45K.

The breach cost 21x their security spend.
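The total and the multiple can be reproduced from the itemized figures. In this small sketch the dictionary labels are my shorthand, and the "$200K+" legal line is treated as its $200K lower bound, which is why the result is a floor rather than an exact cost.

```python
# Itemized costs quoted above, in dollars.
breach_costs = {
    "forensic investigation": 125_000,
    "legal counsel for malpractice exposure": 200_000,  # quoted as $200K+, lower bound
    "cyber insurance premium increase (annual)": 35_000,
    "IT remediation and security improvements": 180_000,
    "lost client revenue (annual)": 400_000,
}
annual_security_spend = 45_000


def breach_multiple(costs: dict, annual_spend: float) -> float:
    """How many years of security spend the breach cost is equivalent to."""
    if annual_spend <= 0:
        raise ValueError("annual_spend must be positive")
    return sum(costs.values()) / annual_spend


total = sum(breach_costs.values())
multiple = breach_multiple(breach_costs, annual_security_spend)

print(f"Total documented cost: ${total:,}+")
print(f"Multiple of annual security spend: {multiple:.1f}x")
```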

But here’s what makes this a compliance failure, not just a security failure: When regulators asked “How will you prevent this in the future?” the firm couldn’t provide a clear answer. Their security platform failed to detect the threat. They had to implement new controls, retrain staff, and upgrade systems—all while documenting why their existing controls were inadequate.

What Compliance Teams Should Ask Vendors

If you’re evaluating email security and compliance is a concern, here are the questions that matter:

- “Can you show me the reasoning behind this blocked email in terms I can document for an auditor?”
  If they show scores without reasoning, it’s not auditable.
- “How do you minimize false positives without creating detection gaps?”
  If they can’t show measurable false positive rates on sophisticated attacks, you’ll be drowning your security team.
- “Can you detect threats you’ve never seen before?”
  If they rely on pattern-matching or historical training data, they can’t.
- “What’s your documented accuracy on AI-generated attacks?”
  If they don’t have specific metrics, they’re guessing.
- “How do you help us meet regulatory reporting requirements?”
  If they don’t have compliance-specific features, you’re on your own.
- “Can you provide audit-ready documentation of your detection decisions?”
  If they can’t, your compliance team will spend months reconstructing evidence.

The Bottom Line

Compliance risk isn’t separate from security risk. In regulated industries, they’re the same thing.

Email security that can’t explain its decisions creates regulatory exposure. Systems that generate high false positives create business disruption. Architectures that depend on pattern-matching can’t detect AI-generated attacks.

The question for compliance officers isn’t “Do we have email security?” It’s “Can our email security demonstrate to regulators that our controls actually work?”

If the answer is unclear, you have a compliance gap.
