Why Encryption Can No Longer Protect Law Firms in the Age of AI

Trust is the currency of the legal profession. When a partner sends an email to a client regarding a merger, or when an associate sends a discovery file to opposing counsel, the entire system relies on the assumption that the person sending the email is who they say they are.

For decades, we solved the "Trust" problem with "Math." We used encryption (TLS, PGP, secure client portals) to ensure that no one could read a message while it traveled from Point A to Point B. We built fortified tunnels and assumed the castle was safe.

But in late 2025, the rules changed. The enemy stopped trying to dig into the tunnel. Instead, they used AI to forge the keys.

Karen L., Chief Commercial Officer at StrongestLayer, recently highlighted a critical vulnerability that is keeping CISOs awake at night:

"Attorney-client privilege remains the cornerstone of legal practice. In 2026, protecting that privilege requires more than encryption—it requires reasoning-based security architecture that understands the difference between legitimate legal communications and sophisticated impersonation attempts."

This article explores why the "Encryption Era" is ending, why "Pattern Matching" is failing, and how law firms must pivot to AI-Native Foundations to survive the next generation of threats.

Part 1: The New Threat (Time and Deception)

To understand why your current security stack is failing, look at two dimensions of a modern attack: the time it takes to launch, and the quality of the deception.

The Compression of Threat Timelines

In the past, crafting a convincing spear-phishing campaign against a high-value target (like a Senior Partner at a Magic Circle firm) took weeks. Attackers had to research the target, scrape public data, and manually write emails.

Today, that timeline has collapsed. According to recent market data, threat timelines have compressed from 23 days to just 2 hours.

Malware and phishing templates now regenerate every 15 seconds. This means that by the time a "Signature-Based" security tool has identified a threat and updated its blacklist, the attacker has already changed the code, the hash, and the domain. You are fighting a machine gun with a notepad.
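To make the signature problem concrete, here is a minimal Python sketch of an exact-hash blocklist. The message bodies are invented for illustration, and production gateways use richer signatures than a single SHA-256 digest, but the failure mode is the same in kind: a signature can only match content that has already been seen.

```python
import hashlib


def sha256_hex(text: str) -> str:
    """Digest of a message body, as a simple signature tool might store it."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


# Hypothetical: the blocklist holds the one variant the vendor has already analyzed.
seen_variant = "Please review the attached draft before the client call."
blocklist = {sha256_hex(seen_variant)}

# The attacker's generator swaps a single word for the next send.
new_variant = "Please review the attached draft before our client call."


def is_blocked(body: str) -> bool:
    """Exact-match signature check: only byte-identical content is caught."""
    return sha256_hex(body) in blocklist


print(is_blocked(seen_variant))  # True
print(is_blocked(new_variant))   # False: one changed word yields an unrelated hash
```

A generator that produces a fresh variant every few seconds never has to beat the blocklist; it only has to avoid repeating itself.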

The "Perfect" Impersonation

The second shift is linguistic. Generative AI tools (LLMs) have mastered the specific "dialect" of the legal profession.

- Old Phishing: "Dear Sir/Madam, kindly remit payment for invoice." (Easy to spot).

- AI Phishing: "Hi Sarah, just reviewed the redlines on the IP agreement. Section 4 looks risky. Can you check this draft before I call the client?" (Indistinguishable from reality).

These attacks do not rely on malicious links (which gateways and link scanners are built to catch). They rely on social engineering, which payload-focused filters ignore. They prey on the human element, exploiting the high-pressure, fast-paced environment of a law firm, where saying "no" to a partner is difficult.
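The AI phishing example above illustrates why. The Python sketch below extracts the artifacts a payload-focused filter could inspect, assuming (as the article does) that the message is text-only with no attachment. The regex and function name are illustrative, not any product's logic.

```python
import re

# Deliberately simple payload extraction: URLs in the body plus attachment names.
URL_PATTERN = re.compile(r"https?://\S+|www\.\S+", re.IGNORECASE)


def payload_indicators(body: str, attachments: list[str]) -> dict:
    """Return everything a link or attachment scanner would have to work with."""
    return {"urls": URL_PATTERN.findall(body), "attachments": list(attachments)}


ai_phish = (
    "Hi Sarah, just reviewed the redlines on the IP agreement. "
    "Section 4 looks risky. Can you check this draft before I call the client?"
)

print(payload_indicators(ai_phish, []))  # {'urls': [], 'attachments': []}
```

With nothing to detonate or look up, the decision has to come from what the message says and who is saying it.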

Part 2: The Encryption Fallacy

There is a dangerous misconception in the legal sector that "Encrypted = Secure." This is what we call the Encryption Fallacy.

Karen L. puts it bluntly:

"While encryption protects content in transit, it doesn't verify sender authenticity or detect social engineering in encrypted messages."

The Armored Car Analogy

Imagine you hire an armored car to transport a briefcase.

- Encryption ensures the armored car is bulletproof. No one can steal the briefcase while it is driving.

- Authenticity is checking who put the briefcase in the car in the first place.

If a criminal hands the driver a briefcase containing a bomb, the armored car will deliver that bomb safely and securely to your front door. The "security" of the car (encryption) actually worked against you, because it ensured the threat arrived without interference.

In a law firm, if an attacker impersonates a client and sends a fraudulent wire instruction via an encrypted email channel, your "Security" tools will happily deliver it because the encryption protocols were followed perfectly. The pipe was secure, but the water was poisoned.
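The distinction can be sketched in Python: whether or not a message arrived over TLS, a separate check has to ask who is claiming to send it. The sketch below uses only the standard library to flag two simple sender-authenticity signals, a lookalike domain and a mismatched Reply-To. The client domain list, similarity threshold, and sample message are hypothetical, and this is a basic heuristic, not the reasoning-based approach the article goes on to describe.

```python
from difflib import SequenceMatcher
from email import message_from_string
from email.utils import parseaddr

# Hypothetical: client domains the firm has verified out of band.
KNOWN_CLIENT_DOMAINS = {"clientcorp.com"}


def domain_of(address: str) -> str:
    return address.rpartition("@")[2].lower()


def sender_findings(raw_message: str) -> list[str]:
    """List sender red flags; encrypting the transport checks none of these."""
    msg = message_from_string(raw_message)
    _, from_addr = parseaddr(msg.get("From", ""))
    _, reply_addr = parseaddr(msg.get("Reply-To", ""))
    from_domain = domain_of(from_addr)
    findings = []
    if from_domain not in KNOWN_CLIENT_DOMAINS:
        for known in KNOWN_CLIENT_DOMAINS:
            # Near-identical spelling suggests a lookalike (typosquatted) domain.
            if SequenceMatcher(None, from_domain, known).ratio() >= 0.85:
                findings.append(f"{from_domain} looks like {known}")
    if reply_addr and domain_of(reply_addr) != from_domain:
        findings.append("Reply-To points to a different domain")
    return findings


forged = (
    "From: Client Corp Treasury <treasury@c1ientcorp.com>\n"
    "Reply-To: treasury.desk@payments-example.net\n"
    "Subject: Updated wire instructions\n"
    "\n"
    "Please use the new account below for Friday's closing.\n"
)
print(sender_findings(forged))
```

Both flags fire on the forged message, yet nothing about them depends on whether the message was encrypted in transit.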

Part 3: The Economic Impact of "Legacy" Paranoia

When security teams realize their tools can't catch these AI threats, they usually react in one way: Paranoia.

They crank up the sensitivity of their Secure Email Gateways (SEGs). They create strict rules: "Flag any email with an attachment from outside the organization." "Quarantine any email that uses urgent language."

This creates a massive amount of "Noise"—false positives that drown security teams in busywork.

Case Study: The Global 50 Law Firm

We recently conducted a deep-dive audit of a Global 50 law firm to measure the cost of this "Legacy Paranoia." The numbers were staggering.

Because their traditional security controls couldn't distinguish between a real client urgency and a fake phishing urgency, the system was flagging legitimate business emails as threats.

- The Metric: The firm's analysts spent 160+ hours per quarter investigating emails that turned out to be perfectly safe.

- The Cost: At standard analyst rates (roughly $150 per hour), this amounted to $24,000 wasted every quarter.

That is $96,000 a year, nearly $100,000, spent not on fighting attackers but on fighting your own software.
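The case-study figures reduce to simple arithmetic, taking 160 hours as the quarterly baseline. The hourly rate here is implied by the two reported numbers rather than stated separately in the audit:

```latex
\text{implied rate} = \frac{\$24{,}000}{160\ \text{h}} = \$150/\text{h},
\qquad
\text{annual cost} = 4 \times \$24{,}000 = \$96{,}000
```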

And this is just the direct cost. The indirect cost—partners waiting for emails that are stuck in quarantine, missed filing deadlines, disrupted deal flows—is likely 10x higher. In the legal world, time is literally money (billable hours). A security system that slows down the firm is a liability, not an asset.

Part 4: The Architectural Shift (Gen 1 vs. Gen 3)

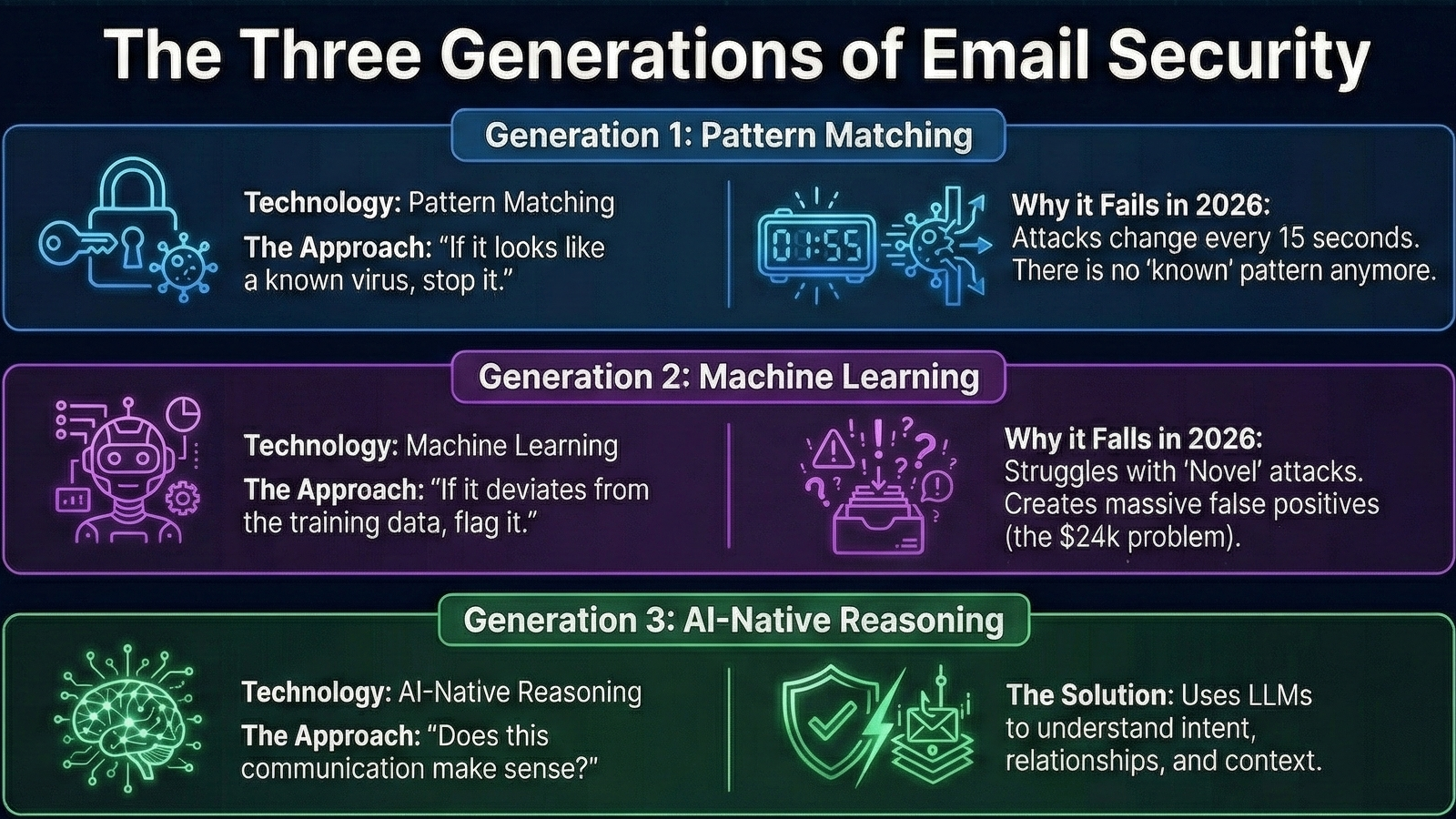

If "Better Detection" is failing, and "Stricter Rules" are too expensive, what is the solution? We are witnessing a "Category Redefinition." We are moving from Gen 1 & 2 (Detection) to Gen 3 (Architecture).

Defining "Reasoning-Based Security"

Karen L. notes that protecting privilege requires a "reasoning-based security architecture."

What does this mean? A Reasoning Engine doesn't just scan for keywords. It understands the entities involved (people, firms, clients) and the connections between them.

- The Context Check: The engine knows that Attorney John represents Client Corp. It knows they usually trade drafts on Tuesdays.

- The Anomaly: When an email comes in from Client Corp on a Saturday asking for an urgent wire transfer to a new bank account, the Reasoning Engine spots the disconnect.

  - It's not a virus.

  - The link isn't bad.

  - But the Relationship Logic is broken.
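The Client Corp scenario can be made concrete with a small Python sketch. To be clear, this is not a reasoning engine: it is a hand-written, rule-based stand-in that only shows the kind of relationship signals the scenario describes (usual correspondence days, known bank accounts, urgency around payments). The class names, account identifiers, and baseline structure are all hypothetical. A Gen 3 system, as the article describes it, would learn these baselines and weigh them with a model rather than hard-coding checks.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class RelationshipBaseline:
    """What the firm has historically seen from one counterparty (hypothetical model)."""
    usual_weekdays: set[int]  # date.weekday(): Monday=0 ... Sunday=6
    known_accounts: set[str] = field(default_factory=set)


@dataclass
class InboundEmail:
    sender_org: str
    sent_on: date
    requests_wire: bool = False
    bank_account: Optional[str] = None
    marked_urgent: bool = False


def relationship_anomalies(email: InboundEmail,
                           baselines: dict[str, RelationshipBaseline]) -> list[str]:
    """Return reasons this email breaks the sender's established pattern."""
    baseline = baselines.get(email.sender_org)
    if baseline is None:
        return ["no prior relationship with this sender"]
    reasons = []
    if email.sent_on.weekday() not in baseline.usual_weekdays:
        reasons.append("sent outside the usual correspondence days")
    if email.requests_wire and email.bank_account not in baseline.known_accounts:
        reasons.append("wire request to a bank account never used before")
    if email.requests_wire and email.marked_urgent:
        reasons.append("payment request framed as urgent")
    return reasons


# Client Corp usually trades drafts on Tuesdays and pays from one known account.
baselines = {"Client Corp": RelationshipBaseline(usual_weekdays={1},
                                                 known_accounts={"ACCT-001"})}

# Saturday, 15 November 2025: urgent wire to an account never seen before.
saturday_wire = InboundEmail("Client Corp", date(2025, 11, 15), requests_wire=True,
                             bank_account="ACCT-999", marked_urgent=True)
print(relationship_anomalies(saturday_wire, baselines))
```

Each individual signal is weak on its own; the scenario is suspicious because several of them break at once for the same relationship.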

This is how you stop AI phishing: You fight AI with AI. You use a "Good LLM" to analyze the semantic patterns of the "Bad LLM."
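One way to picture the "Good LLM" side is as a prompt that pairs the message with what the firm already knows about the relationship, plus a strict parser for the answer. The sketch below assumes a generic `ask_model` function that sends a prompt to some language model and returns its text reply. That function, the prompt wording, and the JSON verdict format are hypothetical and are not StrongestLayer's implementation.

```python
import json
from typing import Callable


def build_review_prompt(email_body: str, relationship_notes: list[str]) -> str:
    """Combine the message with what the firm knows about the relationship."""
    context = "\n".join(f"- {note}" for note in relationship_notes)
    return (
        "You review email for a law firm. Using the relationship context, decide "
        "whether the message is consistent with the sender's normal behavior.\n"
        f"Relationship context:\n{context}\n\n"
        f"Message:\n{email_body}\n\n"
        'Answer with JSON: {"verdict": "consistent" or "anomalous", "reason": "..."}'
    )


def review(email_body: str, relationship_notes: list[str],
           ask_model: Callable[[str], str]) -> dict:
    """ask_model is a placeholder for whatever LLM client the firm uses."""
    try:
        reply = json.loads(ask_model(build_review_prompt(email_body, relationship_notes)))
    except json.JSONDecodeError:
        reply = {}
    if not isinstance(reply, dict) or reply.get("verdict") not in {"consistent", "anomalous"}:
        # Never trust malformed model output; route it to a human instead.
        return {"verdict": "needs_review", "reason": "unusable model output"}
    return reply
```

The design choice worth noting is the fallback: when the model's answer cannot be parsed, the message goes to human review rather than being silently delivered or blocked.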

Part 5: How to Evaluate Your Security Stack

If you are a CISO at a law firm, you need to stop asking "What is your catch rate?" and start asking architectural questions.

Based on the StrongestLayer Framework, here are the three metrics you should demand from your vendors:

1. Detection Completeness (Can it see the lie?)

Does the tool rely on "Rules" (which are easily evaded) or "Behavioral Understanding"? Can it detect a text-only social engineering attack that has no payload?

2. Accuracy Without Chaos (Can it reduce the noise?)

Ask for the False Positive rate. If a vendor boasts high detection but floods your SOC with 160+ hours of investigation time per quarter, they are not a security solution; they are a resource drain. Gen 3 tools reduce the noise by understanding context.
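Asking for a single number can hide the cost, because at law-firm email volumes even a small false positive rate adds up to many analyst hours. The Python sketch below computes the metrics worth requesting side by side. The quarterly counts and the 10-minute triage time are hypothetical, chosen only so that the false-positive workload matches the case study's 160 hours.

```python
from dataclasses import dataclass


@dataclass
class QuarterCounts:
    legitimate_emails: int   # all benign messages received
    flagged_legitimate: int  # false positives
    malicious_emails: int    # all malicious messages received
    flagged_malicious: int   # true positives
    triage_minutes: float    # average analyst time per flagged email


def noise_report(c: QuarterCounts) -> dict[str, float]:
    flagged = c.flagged_legitimate + c.flagged_malicious
    return {
        "detection_rate": c.flagged_malicious / c.malicious_emails,
        "false_positive_rate": c.flagged_legitimate / c.legitimate_emails,
        "precision": c.flagged_malicious / flagged,  # share of alerts that were real
        "hours_on_false_positives": c.flagged_legitimate * c.triage_minutes / 60,
    }


report = noise_report(QuarterCounts(legitimate_emails=200_000, flagged_legitimate=960,
                                    malicious_emails=50, flagged_malicious=40,
                                    triage_minutes=10))
print(report)
```

In this made-up quarter, a false positive rate under half a percent still means only 4% of alerts are real and 160 analyst hours go to safe email.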

3. Speed of Correction (How fast can it fix a failure?)

This is the most critical and overlooked metric.

"We often focus so much on prevention that we forget how fast systems need to react when they fail."

When an attack does get through (and in the age of AI, 100% prevention is a myth), how fast can the system remediate it?

- Old Standard: Hours or Days (Analyst review).

- New Standard: Seconds (AI-driven auto-remediation).
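The remediation half of that comparison can be sketched in Python against a hypothetical in-memory mail store. A real deployment would call its own mail platform's APIs, which are not shown or assumed here. The point is structural: once a verdict flips to malicious, pulling the message from every mailbox is a loop a machine can run immediately, without waiting in an analyst's queue.

```python
import time
from dataclasses import dataclass, field


@dataclass
class MailStore:
    """In-memory stand-in for a mail platform: mailbox name -> set of message IDs."""
    inboxes: dict[str, set[str]]
    quarantine: dict[str, set[str]] = field(default_factory=dict)


def claw_back(store: MailStore, message_id: str) -> tuple[int, float]:
    """Move one message out of every inbox into quarantine once judged malicious.

    Returns how many mailboxes were cleaned and how long the sweep took in seconds.
    """
    started = time.perf_counter()
    cleaned = 0
    for mailbox, messages in store.inboxes.items():
        if message_id in messages:
            messages.discard(message_id)
            store.quarantine.setdefault(mailbox, set()).add(message_id)
            cleaned += 1
    return cleaned, time.perf_counter() - started


store = MailStore(inboxes={"sarah": {"m1", "m2"}, "john": {"m1"}, "ops": {"m3"}})
cleaned, seconds = claw_back(store, "m1")
print(f"removed from {cleaned} mailboxes in {seconds:.6f}s")
```

Quarantining rather than deleting keeps the message available if the verdict later turns out to be wrong.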

Final Thoughts: Protecting the "Crown Jewels"

For law firms, the "Crown Jewels" are not just the bank accounts—they are the secrets. The litigation strategies, the M&A roadmaps, the private confessions of clients.

Attorney-Client Privilege is a sacred trust. But in 2026, you cannot uphold that trust with tools built for 2015. The attackers have modernized. They have moved from "Digital Burglary" (hacking) to "Digital Con Artistry" (impersonation).

The data is clear:

- 50% of security pros are fooled by AI phishing.

- At one Global 50 firm, legacy tools wasted $24,000 a quarter on false positives.

- Threats are moving faster than human analysts can think.

The only way forward is a fundamental shift to AI-Native, Reasoning-Based Architecture. It is time to stop polishing the armor and start upgrading the brain inside it.

Frequently Asked Questions (FAQ)

Q1: Why is encryption no longer enough to protect attorney-client privilege?

Encryption (TLS) only protects data in transit, ensuring no one intercepts the message. However, it does not verify the authenticity of the sender. In 2026, cybercriminals use AI to impersonate trusted clients or partners within encrypted channels. If the sender is an imposter, encryption simply ensures the fraudulent message arrives safely.

Q2: What is Reasoning-Based Security Architecture?

Reasoning-Based Security (Gen 3) is a new defense architecture that uses Large Language Models (LLMs) to analyze the context, intent, and relationships within an email. Unlike legacy tools that look for known bad links (Pattern Matching), a reasoning engine understands that a request is "anomalous" based on historical communication patterns, effectively stopping AI-driven social engineering.

Q3: How much do false positives cost law firms?

Legacy security tools often create "noise" by flagging legitimate business emails as threats. In StrongestLayer's audit of one Global 50 law firm, analysts spent over 160 hours per quarter investigating false positives, costing approximately $96,000 annually in lost productivity.

Q4: Can AI-generated phishing emails bypass Secure Email Gateways (SEGs)?

Yes. Traditional SEGs rely on "signatures" (known bad code) or "blacklists" (known bad domains). Since AI tools can generate unique, text-only phishing emails that contain no malicious payloads or links, they bypass standard detection filters. Recent studies show AI phishing now fools security professionals 50% of the time.

Q5: What is the difference between Gen 2 and Gen 3 email security?

Gen 2 (Machine Learning) identifies threats by comparing them to training data; it struggles with novel, zero-day attacks. Gen 3 (AI-Native) uses reasoning to understand the meaning of the communication. The shift from Gen 2 to Gen 3 is described as a move from "better detection" to "architectural resilience."
