The Liveness Illusion: Why Identity is Not the Same as Intent in 2026

For years, the cybersecurity industry has chased the "Holy Grail" of identity: Biometrics.

We were told that if we could just prove a user was "live" through a thumbprint or a face scan, the enterprise would be impenetrable.

But in 2026, that promise has been broken. The rise of Real-Time Generative Deepfakes has turned "Liveness" into an illusion. Today, an attacker can synthesize a CEO’s face and voice in real time, with enough precision to pass traditional biometric checks.

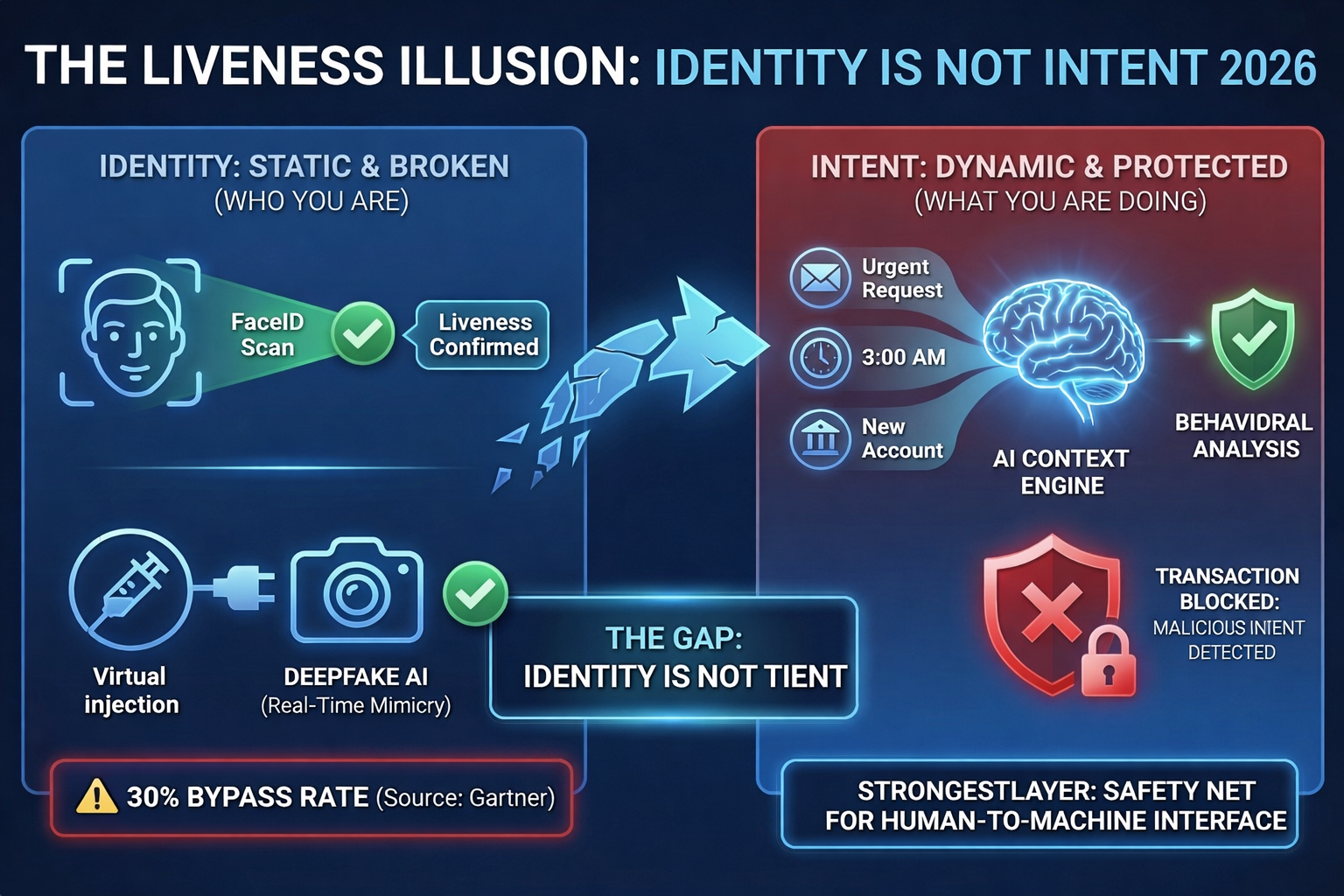

The crisis we face isn't just a failure of technology; it's a failure of philosophy. We have spent billions verifying who someone is, while completely ignoring what they are doing.

This report explores the "Identity-Intent Gap" and why the future of security lies not in scanning faces, but in understanding the context of communication.

The Death of the "Blink Test"

Only two years ago, "Liveness Detection" was considered foolproof. Security systems would ask a user to blink, turn their head, or say a random phrase to ensure they weren't a static photo or a pre-recorded video.

In 2026, those tests are a relic of the past.

The Rise of Real-Time Injection

Modern attackers no longer hold a phone up to a screen. They use Virtual Camera Injection. By feeding Generative AI streams directly into the video buffer of a Zoom, Teams, or MFA application, they bypass the physical camera entirely.

The AI doesn't just "look" like the person; it reacts. It blinks when the system asks. It smiles. It mimics the unique micro-expressions of the target. To the biometric engine, the user is "Live." To the business, the user is a ghost.

The "Identity Snapshot" Problem

Biometrics are, by definition, a snapshot. They verify a single moment in time—the login.

- You scan your face.

- You are granted access.

- The biometric "guard" goes home.

Once the session is open, the biometric check plays no further part. If that session is hijacked through token theft or an adversary-in-the-middle attack, the attacker inherits the "Trusted Identity" without ever having to show their own face. Relying on biometrics alone is like checking a driver's license at a toll booth but never checking whether the driver is steering the car into a wall.
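The snapshot problem can be made concrete with a minimal sketch. The names below (`Session`, `SessionStore`, `login`, `is_authorized`) are hypothetical and do not describe any particular product. The only point is that a token checked after login says nothing about who is holding it.

```python
import secrets
from dataclasses import dataclass
from typing import Optional


@dataclass
class Session:
    user: str
    biometric_passed: bool  # recorded once, at login


class SessionStore:
    """Hypothetical in-memory session store, for illustration only."""

    def __init__(self) -> None:
        self._sessions = {}

    def login(self, user: str, biometric_passed: bool) -> Optional[str]:
        # The biometric check happens here, and only here.
        if not biometric_passed:
            return None
        token = secrets.token_hex(16)
        self._sessions[token] = Session(user, biometric_passed)
        return token

    def is_authorized(self, token: str) -> bool:
        # After login, only possession of the token is checked.
        return token in self._sessions


store = SessionStore()
cfo_token = store.login("cfo", biometric_passed=True)

# An attacker who steals the token never faces the biometric check.
stolen_token = cfo_token
attacker_allowed = store.is_authorized(stolen_token)
```

In this sketch `attacker_allowed` ends up true even though the attacker never scanned a face, which is the "guard goes home" problem in miniature.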

The Identity-Intent Gap

This is the core of the StrongestLayer philosophy. To secure a modern organization, we must recognize that Identity ≠ Intent.

Defining the Gap

- Identity is static. It answers the question: "Are you who you say you are?" (FaceID, Fingerprints, Passports).

- Intent is dynamic. It answers the question: "Does what you are doing make sense for the business?"

The CFO Scenario

Imagine your CFO is working from a hotel in London. They log into the financial portal using a state-of-the-art FaceID scan. The identity is verified.

Five minutes later, that "verified" CFO sends an urgent request to the accounts team to wire $150,000 to a "new strategic partner" in Singapore.

The Biometric View: The system is happy. The user is logged in. The identity is "Safe."

The Reality View: The request is a disaster. The "partner" is a shell company. The "CFO" is actually an attacker: either a high-fidelity Deepfake that passed the scan, or someone operating through a hijacked session.

The biometric check was successful, but the security outcome was a total failure because the system could not "see" the malicious intent behind the legitimate identity.

The Productivity Tax of "Identity Obsession"

When organizations realize biometrics are being bypassed, their instinct is to add more biometrics.

We see this today with "Step-up Authentication," where an employee has to scan their face every time they want to open a sensitive document or send an email. This creates what we call Security Friction.

The Paradox of Choice

As we discussed in our research on the Security Paradox, high friction does not lead to higher safety. It leads to Shadow IT.

- If an employee has to scan their face 20 times a day just to do their job, they will start moving sensitive conversations to WhatsApp or personal Gmail to avoid the "hassle."

- They bypass the "secure" identity layer entirely because it prevents them from being productive.

By obsessing over Identity, we have made it harder for our own employees to work than it is for a hacker to automate a deepfake.

Why "Context" is the New Identity

If we can no longer trust the face on the screen, what can we trust? The answer is Context.

In 2026, "Trust" must be earned continuously, not granted in a single snapshot. This is where the communication layer becomes the most important defense in your stack.

1. The Story Matters More Than the Face

Instead of asking "Is this Martin’s face?", a context-aware system asks:

- "Does Martin usually send requests at 3:00 AM?"

- "Is Martin’s tone of voice in this email consistent with his previous 500 messages?"

- "Why is Martin asking to change bank details for a vendor we’ve worked with for 10 years?"
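Two of the questions above can be sketched as simple rule-based signals. This is an illustration under stated assumptions, not a description of how any real product scores requests: the `Request` fields, the `context_flags` function, the 07:00 to 19:59 "usual hours" window, and the five-year vendor threshold are all hypothetical. Tone is left out because it needs a comparison against past messages rather than a single rule.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Request:
    sender: str
    sent_at: datetime
    changes_bank_details: bool
    vendor_relationship_years: int


def context_flags(req: Request, usual_hours: range = range(7, 20)) -> list:
    """Return the context questions this request fails (illustrative rules)."""
    flags = []
    if req.sent_at.hour not in usual_hours:
        flags.append("sent outside the sender's usual hours")
    if req.changes_bank_details and req.vendor_relationship_years >= 5:
        flags.append("bank details changed for a long-standing vendor")
    return flags


request = Request(
    sender="Martin",
    sent_at=datetime(2026, 3, 10, 3, 0),  # 3:00 AM
    changes_bank_details=True,
    vendor_relationship_years=10,
)
flags = context_flags(request)
```

Here the 3:00 AM bank-detail change raises both flags, while the same sender making a routine mid-morning request would raise none. A production system would presumably learn each sender's baseline instead of hard-coding it.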

2. Behavioral Intent as a Shield

StrongestLayer focuses on the Intent Layer. By analyzing the nuances of communication—the language, the timing, the metadata, and the historical relationship—we can spot a "Liveness Lie" even when the biometric check passes.

AI can mimic a face, but it struggles to mimic a relationship. It doesn't know the inside jokes, the specific shorthand, or the "unwritten rules" of a specific team. Context-aware security looks for these gaps. When the "intent" doesn't match the "identity," the system intervenes.
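One crude way to picture a "shorthand" check is to compare the wording of a new message against the sender's past messages. The sketch below uses word-frequency cosine similarity. That choice is our own illustrative assumption and far simpler than anything a real system would rely on, and the function names and sample messages are hypothetical.

```python
import math
import re
from collections import Counter


def word_counts(text: str) -> Counter:
    """Lowercased word frequencies; a deliberately crude style fingerprint."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def style_similarity(history: list, message: str) -> float:
    """Compare a new message against the sender's combined past messages."""
    profile = Counter()
    for past in history:
        profile.update(word_counts(past))
    return cosine_similarity(profile, word_counts(message))


history = [
    "hey team, quick one: can you ping me the q3 numbers when you get a sec",
    "thx all, ping me if anything looks off in the q3 deck",
]
familiar = style_similarity(
    history, "quick one: ping me the q3 deck when you get a sec"
)
unfamiliar = style_similarity(
    history, "Kindly process the attached remittance urgently and confidentially"
)
```

The message written in the team's usual shorthand scores higher than the formal, urgent one. A low score is not proof of fraud, only a reason to look more closely at the request.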

The 2027 Roadmap for the Resilient CISO

The goal is not to delete your biometric tools. The goal is to stop treating them as a Silver Bullet. As we move into 2027, a resilient security strategy must include three specific shifts:

I. From "Login Security" to "Action Security"

Don't just secure the front door. Secure the high-value actions. If a user tries to move money, change a password, or export a customer list, the system should evaluate the context of that request in real time, regardless of how they logged in.
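As a rough sketch of what "Action Security" could look like in code, the decorator below runs a context check every time a high-value action is called, whatever happened at login. Everything here is hypothetical: the `high_value_action` decorator, the `ActionBlocked` exception, and the "known payee" policy are stand-ins chosen for illustration, not a real API.

```python
from functools import wraps
from typing import Callable


class ActionBlocked(Exception):
    """Raised when a high-value action fails its context check."""


def high_value_action(context_check: Callable[[str, dict], bool]):
    """Wrap an action so context is evaluated on every call, not just at login."""

    def decorator(action):
        @wraps(action)
        def guarded(user: str, **details):
            if not context_check(user, details):
                raise ActionBlocked(f"{action.__name__} blocked for {user}")
            return action(user, **details)

        return guarded

    return decorator


# Illustrative policy: a payee must already be known to the business.
KNOWN_PAYEES = {"acme-supplies"}


def payee_is_known(user: str, details: dict) -> bool:
    return details.get("payee") in KNOWN_PAYEES


@high_value_action(payee_is_known)
def wire_transfer(user: str, *, payee: str, amount: int) -> str:
    return f"{user} sent {amount} to {payee}"


ok = wire_transfer("cfo", payee="acme-supplies", amount=500)

try:
    wire_transfer("cfo", payee="new-strategic-partner", amount=150_000)
    blocked = False
except ActionBlocked:
    blocked = True
```

The same logged-in "cfo" succeeds with a known payee and is stopped on the unfamiliar one. The design choice worth noting is that the check is attached to the action itself, so it cannot be skipped by arriving with a valid session.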

II. Breaking the Silence Spiral

As our research shows, 50% of employees are afraid to report security mistakes. If an employee suspects a "Deepfake" Zoom call but is afraid to speak up because they might be wrong, the attacker wins. You must build a culture where "Contextual Suspicion" is rewarded, not punished.

III. Investing in Invisible Security

The best security is the kind that doesn't require a face scan. By using AI to monitor the "Communication Fabric" of the company, StrongestLayer provides protection that is invisible to the user but impossible for the attacker to bypass.

Final Thoughts: Trust the Pattern, Not the Person

In the age of the Deepfake, the eyes can be deceived. The ears can be tricked. The biometric sensor can be fed a lie.

The only thing that remains difficult to fake is the pattern of behavior.

The "Liveness Lie" has taught us a valuable lesson: Identity is just a label. Intent is the truth. Organizations that continue to rely solely on biometrics are building their fortresses on sand. The organizations that will survive 2026 and beyond are those that look past the "Face" and start securing the Context.

At StrongestLayer, we aren't interested in who you say you are. We are interested in making sure that what you do is safe for the business. Because in a world of perfect fakes, context is the only thing that’s real.

Frequently Asked Questions (FAQ)

Q1: Can Deepfakes really bypass "Liveness" checks?

Yes. By 2026, real-time generative AI can mimic head movements, blinking, and speech patterns with high enough fidelity to fool most commercial biometric engines. Furthermore, "Virtual Camera Injection" allows attackers to bypass the physical camera entirely, feeding a perfect digital fake into the stream.

Q2: Is Biometric security still useful?

It is useful as a "first hurdle" to stop low-level attackers, but it should never be the sole source of trust. It is a tool for Access, not a tool for Security.

Q3: What is Intent-Based Security?

Intent-based security (or Context-Aware Security) is an approach that analyzes the purpose and context of a communication or action. Instead of just checking if a user is logged in, it asks if the specific request (like a wire transfer or data export) is consistent with that user’s historical behavior and business role.

Q4: How does StrongestLayer help with Deepfakes?

StrongestLayer protects the communication layer. Even if an attacker uses a Deepfake to get past an initial login, StrongestLayer monitors the ongoing "intent" of the conversation. If the attacker tries to manipulate an employee or initiate a risky transaction, the system identifies the behavioral anomaly and blocks the threat.
